Insights & News

The latest insights and perspectives from our team

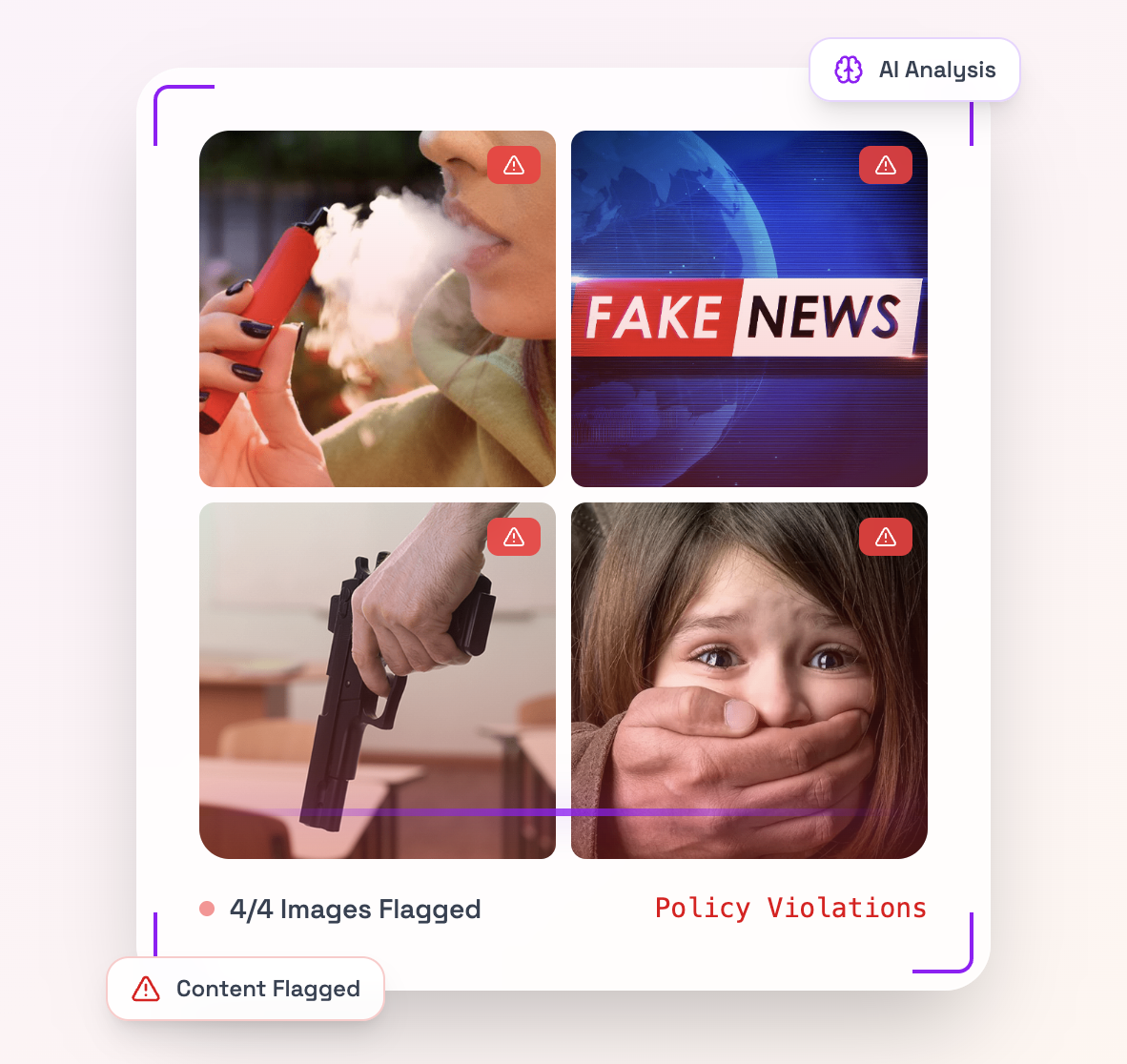

AI Content Moderation with Detector24: A Comprehensive Guide to Moderation Models

In today’s digital landscape, content moderation is essential for maintaining safe and positive online communities. Users upload an enormous volume of content: more than 500 hours of video are added to YouTube each minute, for example.

AI Content Moderation: Technical Guide to Detector24’s Text Moderation Models

Build safer communities with AI Content Moderation. Learn how Detector24’s text moderation models detect scams, PII leaks, misinformation, AI‑generated text, mental‑health crisis signals, and sentiment—plus practical workflow tips.

Python C2PA Tutorial: Verifying Image Authenticity and Detecting Tampering

In today's digital landscape, AI-generated and manipulated images are becoming increasingly sophisticated and widespread. This raises critical questions about authenticity and trust: How can we verify that an image is genuine, and how can we detect whether it has been tampered with?

AI Content Moderation (Audio & Video) with Detector24 Models

Learn how AI Content Moderation works for audio and video, and how Detector24’s moderation models help moderators detect deepfakes, unsafe speech, violence, and more.

AI Voice & Music Detection: Stopping Synthetic Audio Threats

Learn how AI voice and music detection helps stop deepfake audio, fraud, impersonation, and copyright abuse in a world of synthetic sound.

AI Content Moderation for Safer Online Communities

Learn how AI content moderation protects online communities at scale—detecting hate, abuse, spam, and deepfakes in real time with human oversight.

How to Spot AI-Generated Images: Detecting Content from Midjourney, DALL·E & Flux

Learn how to detect AI-generated images from Midjourney, DALL·E and Flux using visual, technical and behavioral signals in 2026.

Deepfakes in 2026: Why Detection Matters More Than Ever

Deepfakes are reshaping fraud in 2026. Learn why real-time, AI-powered detection is now critical for identity trust, compliance, and digital security.