Detecting AI Content: How to Spot AI-Generated Images from Midjourney, DALL·E & Flux

Why AI-Generated Images Are Harder to Spot Than Ever

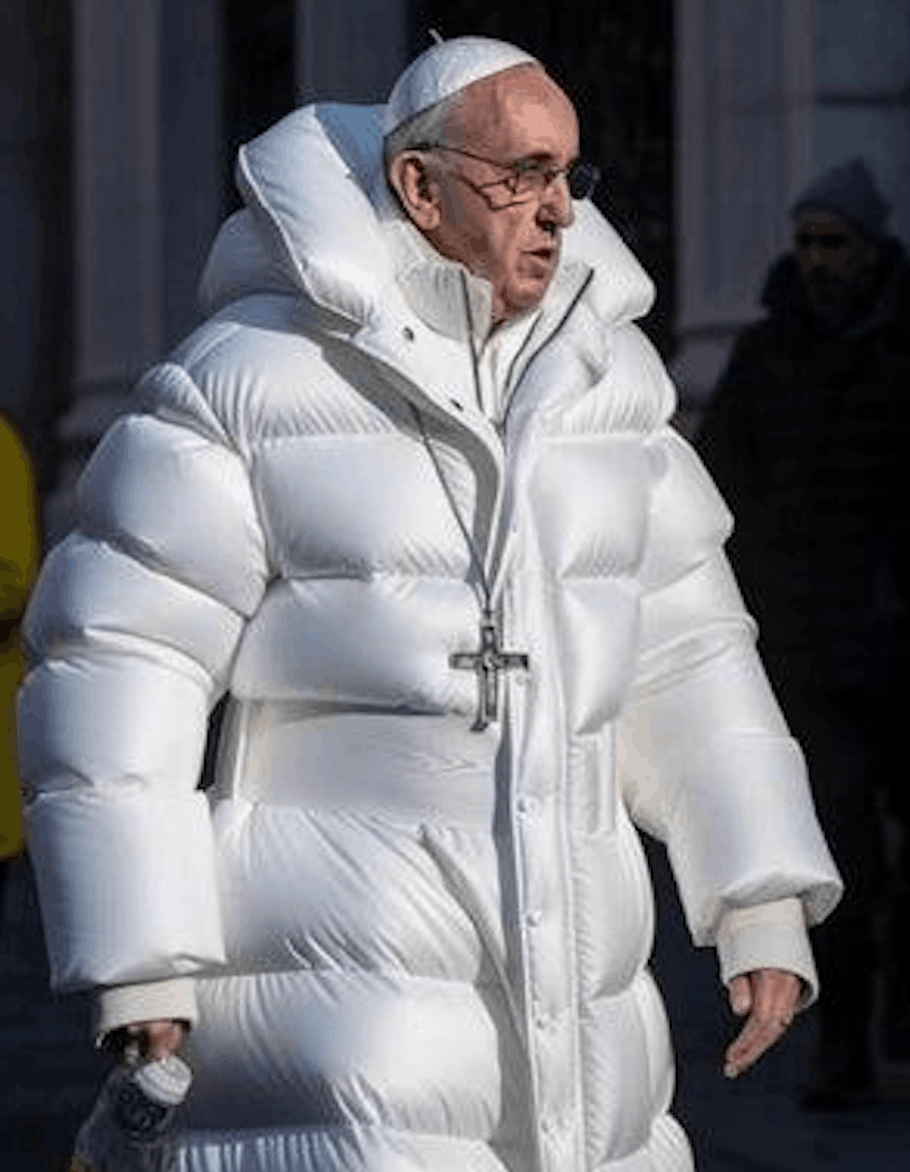

AI image generators have advanced at a shocking pace. Gone are the days of glitchy, obviously fake outputs – today’s synthetic images can look indistinguishable from real photographs. In fact, modern models like Midjourney v6 and DALL·E 3 produce photorealistic results that even tech-savvy viewers only identify correctly about half the time. That makes AI content detection harder, because telling real photos from generated ones by eye is increasingly unreliable. Realism, however, does not equal authenticity. An image can appear perfectly genuine and still be entirely fabricated by AI.

Relying on human intuition alone is increasingly risky. For example, a fact-checker in 2025 confidently declared a fake image authentic simply because the subject’s hand had five fingers – a once-sure tell that no longer holds true. With AI improvements, our gut feelings can be fooled. This has real business impact: sophisticated fraudsters now use AI to generate fake profile photos, bogus product listings, and even counterfeit “evidence” images. From impersonation scams to fake apartment listings, AI visuals are being weaponized for profit. Fake images are also surfacing in legal contexts, so legal professionals need fake image detection tools to verify visual evidence before it is relied on in court. AI-generated images can also be used to create fake news and undermine democratic processes. In a world where seeing is no longer believing, digital trust must be rebuilt from the ground up. Companies can’t afford to assume an image is real just because it looks real.

As AI content detection technology evolves to address these challenges across multiple languages and global contexts, journalists must also develop new standards for verifying audiovisual evidence in an era when anyone can create convincing AI-generated content.

The New Generation of Image Models (What You’re Up Against)

The latest image-generation tools – Midjourney, OpenAI’s DALL·E, Black Forest Labs’ Flux, and others – are far more advanced than early AI art models. These systems use diffusion and transformer architectures that understand prompts with uncanny accuracy and produce high-fidelity images in any style. They can be “prompt-conditioned” to follow detailed instructions (for example, “generate a photo of a courtroom in 1970s newsprint style”) and even mimic specific camera lenses or artists. Unlike older GAN-based generators, today’s diffusion models excel at photorealism and high-resolution output with built-in upscaling. In one test, Flux 1.x models achieved prompt fidelity on par with DALL·E 3 and photorealism comparable to Midjourney v6 – even rendering human hands consistently, a feat earlier models struggled with. DALL·E’s advances in text and image quality have made it significantly more difficult for detection tools to distinguish between real photos and AI-generated images, raising new challenges for misinformation detection and forensic analysis. In short, AI images in 2026 can be as crisp and coherent as a digital photo.

Traditional tells like watermarks or metadata are no longer reliable. Some generators do add hidden markers (OpenAI began embedding invisible C2PA metadata and a small “CR” watermark in DALL·E 3 images). However, these indicators are easily lost – a simple crop, screenshot, or re-save will strip out provenance data. Many popular AI image tools (and virtually all open-source models) add no obvious watermark at all, or can be run in ways that omit them. You certainly can’t count on bad actors to voluntarily disclose “AI-generated” in an image’s metadata or caption. Because watermarks and metadata are so easily removed, verification has to rest on detection models that analyze the image content itself.
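
To make the fragility of metadata concrete, here is a minimal sketch using only Python’s standard library. It builds a tiny PNG carrying a text label, then simulates a re-save that keeps only the chunks needed to display the image. The `tEXt` label is a stand-in for provenance data in general, not the actual C2PA format, and `strip_ancillary` is a hypothetical helper that imitates what many re-encoders and screenshot tools effectively do.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
CRITICAL_CHUNKS = {b"IHDR", b"PLTE", b"IDAT", b"IEND"}


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def iter_chunks(png: bytes):
    """Yield (type, data) for every chunk in a PNG byte string."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        chunk_type = png[pos + 4:pos + 8]
        data = png[pos + 8:pos + 8 + length]
        yield chunk_type, data
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC


def strip_ancillary(png: bytes) -> bytes:
    """Keep only display-critical chunks: pixels survive, metadata does not."""
    kept = [make_chunk(t, d) for t, d in iter_chunks(png) if t in CRITICAL_CHUNKS]
    return PNG_SIGNATURE + b"".join(kept)


# Build a 1x1 grayscale PNG that carries a text label in a tEXt chunk.
# Fields: width, height, bit depth, colour type, compression, filter, interlace.
ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
pixels = zlib.compress(b"\x00\x80")  # filter byte 0 + one gray pixel
labelled = PNG_SIGNATURE + b"".join([
    make_chunk(b"IHDR", ihdr),
    make_chunk(b"tEXt", b"Source\x00hypothetical-ai-generator"),
    make_chunk(b"IDAT", pixels),
    make_chunk(b"IEND", b""),
])

resaved = strip_ancillary(labelled)
print([t.decode() for t, _ in iter_chunks(labelled)])
print([t.decode() for t, _ in iter_chunks(resaved)])
```

The pixel data is byte-for-byte identical before and after, yet the label is gone, which is why a missing provenance tag says nothing about whether an image is authentic.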

Visual Red Flags: What Humans Can Still Catch (Sometimes)

Even as AI visuals become more convincing, there are still a few visual red flags a sharp-eyed human might catch – emphasis on might. These include some classic anomalies (now more subtle than before):

- Anatomical Oddities: AI has mostly conquered the “six-fingered hand” problem, but subtle anatomy errors persist. Look closely at hands, ears, and teeth. Fingers might be fused or positioned oddly; teeth may appear too uniform, or ears might be mismatched. Background humans in a crowd are often a giveaway – AI may render them with jumbled or “melting” fingers and facial features. These quirks are harder to spot than in 2024, yet an unnatural pose or a limb that defies anatomy can still slip through.

- Unrealistic Lighting or Texture: Does the lighting make sense across the scene? AI sometimes produces inconsistent shadows or reflections – for instance, shadows falling in impossible directions, or a mirror reflection that doesn’t match the subject. Textures can also look off: overly smooth skin with no pores (as if airbrushed), or bizarrely exaggerated details. An image might have an unnatural combination of softness and sharpness – for example, a background that’s too blurred next to a subject that’s too crisp. Such subtle lighting and depth-of-field inconsistencies are artifacts of generation.

- Distorted Text and Symbols: Although AI’s text rendering has improved, it’s still worth scrutinizing any writing in the scene. Signs, labels, or posters within an image might contain gibberish or characters that don’t quite form real words. In early AI images, nonsense text (e.g. “FREEE PALESTIME” instead of Palestine) was a sure sign. Current models like DALL·E 3 are specifically trained to produce legible text, so this clue is far less common. Still, if visible text looks subtly distorted or the font style is inconsistent, you may be looking at an AI creation.

When examining images, analyzing specific elements—such as lighting, anatomy, and text—can help identify inconsistencies that may indicate AI generation.

Importantly, these red flags are becoming increasingly subtle – and unreliable as indicators. Major models have dramatically improved on prior failings: by 2025, Midjourney and DALL·E could render hands and English text correctly in most cases. Misaligned eyes or “painted-on” teeth in portraits, once telltale signs, are now rare. Conversely, real photographs can sometimes exhibit odd lighting or blur effects due to lenses and filters. This makes verifying whether images are real photos or AI-generated media more important than ever, but both AI detectors and human judgment have limitations and can be fooled by sophisticated synthetic content.

Behavioral Context: The Missing Piece in Image Verification

When assessing an image’s authenticity, the image alone is rarely enough. Contextual and behavioral signals surrounding that image can be the deciding factor in verification. Think of it this way: a single profile photo might look perfectly real, but what if you knew that the account was just created yesterday, has zero other photos, and the same picture appears on three different “sock puppet” profiles? Such behavioral context strongly suggests something’s amiss – likely an AI-generated persona. Contextual clues are essential for identifying AI-generated images, fake profiles, and other synthetic content used for deception.

For businesses and platforms, analyzing how and where an image is used is as important as inspecting the pixels. Upload patterns, timing, and reuse can offer major clues. For example, if dozens of new listings on a marketplace all use images flagged as AI and all come from accounts with similar sign-up details, you may be dealing with a coordinated fraud ring generating fake product photos. Timing matters too: a viral “news” image that an unknown account posts before any reputable source could indicate a planned deepfake campaign. Repetition is another flag – scammers often reuse the same AI-generated pictures across multiple ads or profiles. Reverse image searches or cross-platform scans might show that a supposedly unique profile photo appears under different names, which is a strong indicator of inauthentic content. AI-generated avatars are increasingly used for impersonation scams and fake profiles, which makes detection tools critical for protecting users from impersonation and manipulation.
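
The reuse signal is simple to sketch. The example below groups uploads by a SHA-256 digest of the file bytes and reports any image that appears under more than one account. The function name, account IDs, and byte strings are made up for illustration. An exact hash only matches byte-identical files, so resized or recompressed copies would need perceptual hashing or a reverse image search instead.

```python
import hashlib
from collections import defaultdict


def find_reused_images(uploads):
    """Map image digest -> sorted account ids, for images used by 2+ accounts.

    `uploads` is an iterable of (account_id, image_bytes) pairs.
    """
    accounts_by_digest = defaultdict(set)
    for account_id, image_bytes in uploads:
        digest = hashlib.sha256(image_bytes).hexdigest()
        accounts_by_digest[digest].add(account_id)
    return {
        digest: sorted(accounts)
        for digest, accounts in accounts_by_digest.items()
        if len(accounts) > 1
    }


# Placeholder byte strings stand in for real image files.
uploads = [
    ("acct-101", b"portrait-bytes-A"),
    ("acct-102", b"portrait-bytes-A"),  # same file, different account
    ("acct-103", b"portrait-bytes-B"),
    ("acct-104", b"portrait-bytes-A"),
]
reused = find_reused_images(uploads)
for digest, accounts in reused.items():
    print(digest[:12], accounts)
```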

Crucially, AI images are often one component of larger fraud or manipulation workflows. A fake image usually isn’t an isolated prank; it’s part of some scheme – be it a phony identity, a fraudulent listing, or a misinformation campaign. Detecting and preventing the spread of misleading content created with AI-generated images is essential to limit the impact of misinformation campaigns. So, tie your image analysis to other identity and risk signals. In identity verification (KYC/KYB) scenarios, for instance, an AI-generated selfie might come alongside a counterfeit ID document; spotting one should trigger scrutiny of the other. Connecting these dots – image authenticity, user account history, device fingerprints, transaction patterns, etc. – gives a much fuller risk profile. A synthetic image uploaded from a known high-risk IP address, or by a user with prior fraud flags, should heighten suspicion far more than the same image from a long-established, verified user.
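
One way to picture this is a single risk score that blends the detector’s output with account context. The sketch below is an assumption-laden illustration: the field names, the weights, and the seven-day cutoff are placeholders chosen for readability, not tuned or recommended values.

```python
from dataclasses import dataclass


@dataclass
class UploadContext:
    ai_probability: float      # detector output in [0, 1]
    account_age_days: int
    high_risk_ip: bool
    prior_fraud_flags: int


def combined_risk(ctx: UploadContext) -> float:
    """Blend the image score with account context into one score in [0, 1].

    The weights are illustrative placeholders, not tuned values.
    """
    score = 0.6 * ctx.ai_probability
    if ctx.account_age_days < 7:
        score += 0.15
    if ctx.high_risk_ip:
        score += 0.15
    if ctx.prior_fraud_flags > 0:
        score += 0.10
    return min(score, 1.0)


# Same image score, very different surrounding context.
new_account = UploadContext(0.7, account_age_days=1, high_risk_ip=True, prior_fraud_flags=0)
trusted_user = UploadContext(0.7, account_age_days=900, high_risk_ip=False, prior_fraud_flags=0)
print(round(combined_risk(new_account), 2), round(combined_risk(trusted_user), 2))
```

The two uploads carry an identical detector score, but the brand-new account on a high-risk IP ends up with the higher combined risk, which mirrors the reasoning in the paragraph above.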

Preventing Fake Profiles and IDs: The Role of AI Image Detection

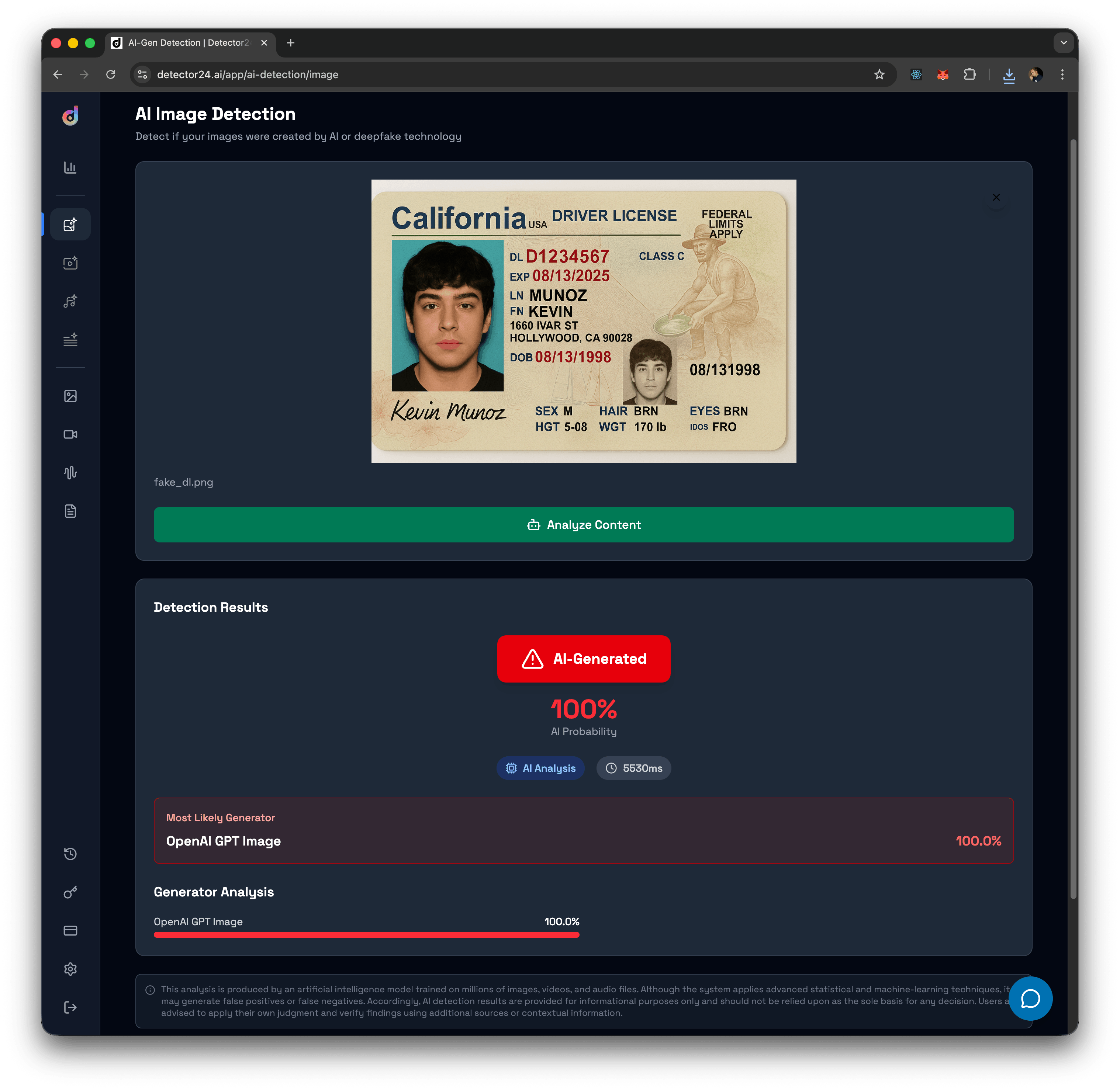

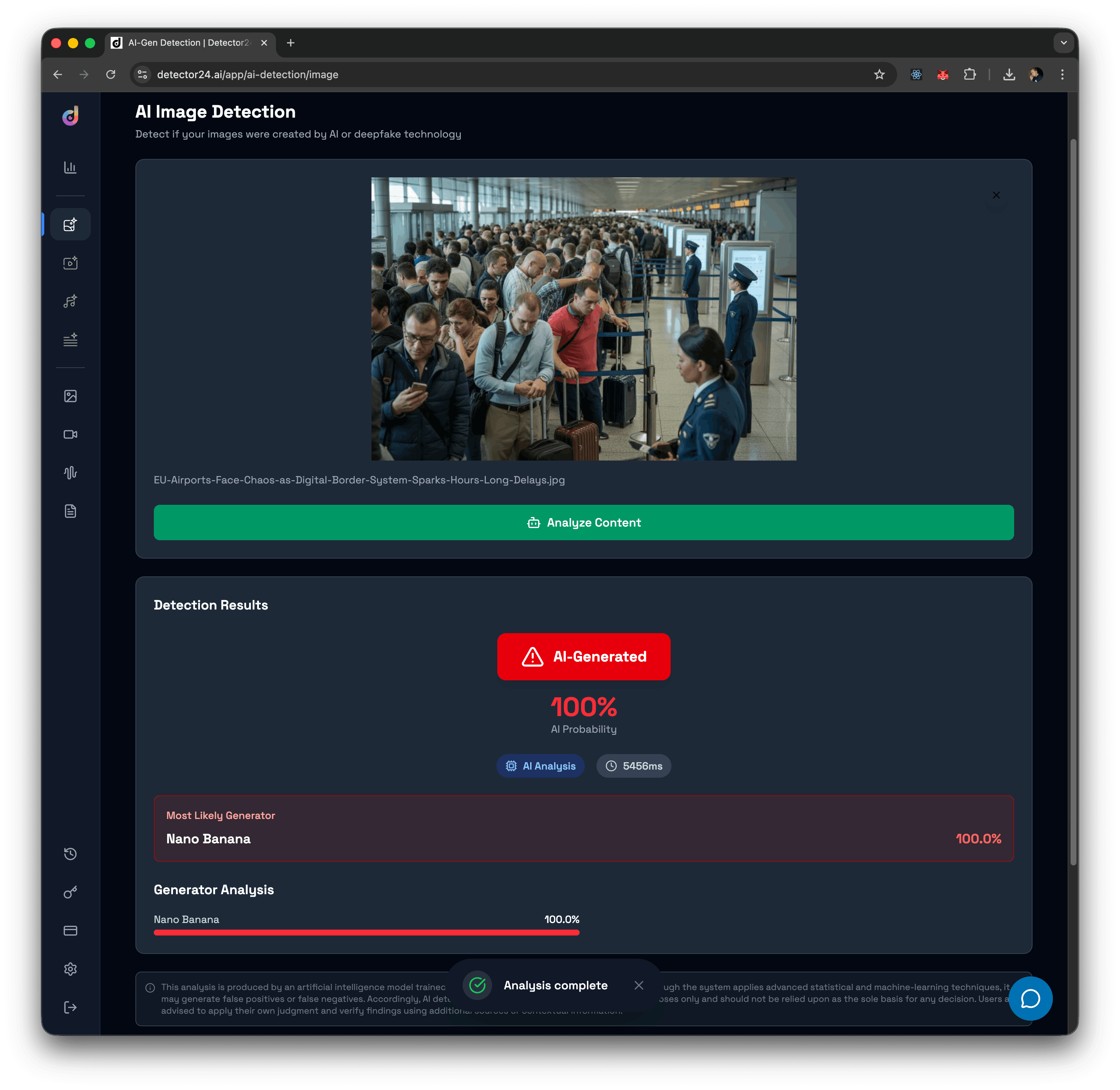

The explosion of AI-generated images has made it easier than ever for bad actors to create convincing fake profiles and fraudulent IDs. With artificial intelligence now capable of producing hyper-realistic faces, avatars, and even entire documents, identity fraud and fake insurance claims have become a growing threat across industries. This is where AI image detection steps in as a critical line of defense.

Modern AI image detectors leverage advanced algorithms and deep learning to analyze visual patterns that are often invisible to the human eye. By scanning for subtle inconsistencies—like unnatural lighting, irregular textures, or anomalies in facial features—these tools can detect AI-generated images, manipulated content, and even edited images in just a few seconds. Whether it’s a fake photo used to create a bogus social media profile or a doctored ID submitted for onboarding, an AI image checker can quickly determine if the visual content is authentic or artificially generated.

The process is straightforward: upload an image to an AI detector, and the system runs a detailed analysis using techniques such as frequency domain fingerprinting and pattern recognition. The AI image detection engine compares the image against vast training data, looking for the telltale signs of AI generation. This rapid, automated approach allows organizations to verify the originality of images at scale, making it much harder for fake profiles and identity fraud to slip through the cracks.
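
As a toy illustration of what “frequency domain” means here, the sketch below takes one row of pixel values, removes its average brightness, and computes a discrete Fourier transform to find the strongest repeating pattern. The input row is synthetic (flat gray with a faint ripple every 4 pixels). Production detectors learn far richer features from full two-dimensional spectra, so treat this as intuition only, not as how any particular product works.

```python
import cmath
import math


def dominant_frequency(row):
    """Return (bin, magnitude) of the strongest non-DC component of a pixel row."""
    n = len(row)
    mean = sum(row) / n
    centered = [value - mean for value in row]  # remove the DC offset
    best_bin, best_magnitude = 0, 0.0
    for k in range(1, n // 2 + 1):
        coefficient = sum(
            centered[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n)
        )
        if abs(coefficient) > best_magnitude:
            best_bin, best_magnitude = k, abs(coefficient)
    return best_bin, best_magnitude


# Synthetic row: flat gray plus a faint ripple that repeats every 4 pixels.
n = 32
row = [100 + 5 * math.cos(2 * math.pi * i / 4) for i in range(n)]
k, magnitude = dominant_frequency(row)
print(f"peak at bin {k}, period {n // k} px, magnitude {magnitude:.1f}")
```

A ripple only 5 gray levels high is hard to see by eye, yet it shows up as one sharp spike in the spectrum. That is the kind of regularity frequency analysis is meant to expose.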

But the benefits of AI image detection go far beyond fraud prevention. In academic settings, these tools help maintain academic integrity by identifying AI-generated visuals in research or student submissions. For businesses, they protect intellectual property by flagging unauthorized or manipulated content. In the fight against fake news, AI image checkers help ensure that only real images are shared, reducing the spread of misleading or manipulated content.

AI image detection is also invaluable for platforms that rely on user-generated content. Social networks, e-commerce sites, and online communities can use AI tools to automatically screen profile photos, product images, and uploaded documents—catching fake profiles, fake insurance claims, and other forms of identity fraud before they cause harm. The technology is equally effective at spotting fake photos, AI-generated faces, and other types of AI-generated content, providing a robust safeguard for digital trust.

To stay ahead of increasingly sophisticated AI models, it’s essential to use high-quality training data and continually update detection algorithms. Combining AI detection tools with human review ensures the highest accuracy, allowing organizations to make informed decisions and minimize false positives. By integrating AI image detection into their workflows, businesses and individuals can confidently verify the authenticity of visual content, spot AI-generated images, and prevent the spread of manipulated or misleading visuals.

As artificial intelligence continues to advance, the need to detect AI-generated images and verify the originality of visual content will only become more urgent. AI image detection is no longer optional—it’s an essential tool for anyone looking to protect their platform, reputation, and users from the risks of fake profiles, identity fraud, and the growing tide of AI-generated content.

How AI Image Detection Works in Practice

So, how do we tackle the tsunami of AI images? Modern detection solutions rely on AI versus AI – using machine learning models to spot the subtle patterns that reveal generated content. Today’s advanced AI checker tools are designed to detect AI content across various image file formats, providing reliable results even as AI models evolve. This model-based classification far outperforms old rule-based checks. In the early days, one might’ve set up rules like “if the image has more than 5 fingers on a hand, flag it.” But as discussed, such static rules are brittle and quickly outdated. Instead, current detectors are trained on large datasets of both real and AI-generated images, learning to differentiate them via complex features humans wouldn’t even think of.

Continuous retraining is key to staying ahead. The AI generators aren’t static; new versions (Midjourney v7, etc.) and entirely new models emerge that may produce slightly different patterns. A robust detection system is not a one-and-done model – it needs a steady diet of updated training data from the latest generators and real images. Leading detection providers (like Detector24) regularly retrain and fine-tune their models as new image generation techniques and styles appear. Large-scale API-based detection solutions can handle billions of images per month, making them suitable for growing businesses and extensive operational demands. This ensures the detection keeps working even as AI content evolves.

Another practical aspect is confidence scoring instead of binary labels. A good AI image detector won’t just spit out “FAKE” or “REAL” – it will assign a probability or confidence level. For instance, it might determine an image is 87% likely AI-generated. Why? Because in the real world, there’s always some uncertainty, and it’s important for systems and human operators to calibrate responses accordingly. A borderline 55% result might be treated differently (e.g. sent for manual review or additional checks) whereas a 99.9% result can be actioned immediately. Embracing this probabilistic approach reduces false alarms and overconfidence. As guidance for journalists notes, the goal has shifted to probability assessment and informed judgment rather than absolute certainty. Professional detection tools can analyze suspicious media and identify deepfakes across audio, images, and videos.
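
A short sketch shows why the choice of cutoff matters. Given a handful of scores with known ground truth, it measures how many AI images are caught and how many real photos are wrongly flagged at two different thresholds. The scores and the helper name `rates_at_threshold` are invented for illustration and do not come from any real detector.

```python
def rates_at_threshold(scored, threshold):
    """Return (detection_rate, false_alarm_rate) when flagging scores >= threshold.

    `scored` is a list of (ai_probability, is_actually_ai) pairs.
    """
    ai_scores = [p for p, is_ai in scored if is_ai]
    real_scores = [p for p, is_ai in scored if not is_ai]
    detection_rate = sum(p >= threshold for p in ai_scores) / len(ai_scores)
    false_alarm_rate = sum(p >= threshold for p in real_scores) / len(real_scores)
    return detection_rate, false_alarm_rate


# Made-up scores for illustration only.
labelled_scores = [
    (0.99, True), (0.93, True), (0.87, True), (0.55, True),
    (0.62, False), (0.30, False), (0.12, False), (0.05, False),
]
for threshold in (0.5, 0.9):
    detected, false_alarms = rates_at_threshold(labelled_scores, threshold)
    print(f"threshold {threshold}: detects {detected:.0%}, false alarms {false_alarms:.0%}")
```

On this sample, the lower cutoff catches every AI image but also flags one real photo, while the higher cutoff raises no false alarms and misses half the fakes. Handling the middle band through manual review is what lets a system avoid choosing between those two failure modes.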

Finally, scalability and integration come from an API-first approach. Detection models are deployed as services that can be called in real-time or batch, making it easy to plug into your existing workflows. AI image detection tools are utilized by journalists to verify photo authenticity before publishing and to analyze images shared on social media to avoid spreading AI-generated or misleading content.
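
The real-time versus batch distinction can be sketched with a stand-in detector. In the example below, `stub_detector` is a hypothetical placeholder for a network call to whatever detection service you use. It does not reflect any vendor’s actual API, and the worker count is arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor


def stub_detector(image_bytes: bytes) -> float:
    """Stand-in for a network call to a detection service (hypothetical)."""
    return 0.95 if image_bytes.startswith(b"synthetic") else 0.10


def screen_batch(images, detect=stub_detector, max_workers=4):
    """Score many images concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(detect, images))


batch = [b"synthetic-portrait", b"camera-photo", b"synthetic-listing"]
print(screen_batch(batch))      # batch mode, e.g. a nightly audit
print(stub_detector(batch[1]))  # single call, e.g. at upload time
```

Passing the detector in as a parameter keeps the workflow code independent of any one provider, so the same pipeline can be pointed at a different service or a test double.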

Best Practices for Businesses in 2026

Confronting the AI-generated image challenge requires a strategic, multilayered approach. Here are some best practices for organizations to stay ahead of the curve:

- Combine visual AI detection with contextual risk analysis: Don’t silo your image analysis. Use AI detectors to screen images for synthetic signs and feed those results into your broader risk engines. For example, if an uploaded profile photo gets a high fake probability, automatically check how old the account is, whether the user provided other verified info, and if similar images were used elsewhere. This holistic view (image signals + user/device context) dramatically improves accuracy. It also helps prioritize cases – a borderline image from a user with other red flags should be treated more urgently than one from a long-trusted customer.

- Avoid relying on metadata, watermarks, or user disclosure: As we noted, invisible watermarks (like those complying with the C2PA standard) and metadata tags can be helpful, but they are easily removed or outright absent. Many AI images will arrive with no telltale metadata saying “made by DALL·E” – bad actors will strip those markers off, and screenshots or re-encodings will destroy them by accident even if no malice is involved. Likewise, do not rely on users to self-report that an image is AI-generated.

- Design escalation flows for borderline cases: Treat image authenticity checks as you would any risk scoring system – define clear thresholds and actions. For instance, if your detector gives a very high confidence that an image is AI (say 90%+), you might automatically block that content or fail that verification step. For intermediate confidence (perhaps 50–89%), route the case to a manual review or a secondary tool for further analysis. Low confidence (under 50%) might mean the image is likely real, so it passes, but you still log the result. Having this tiered approach ensures you’re neither overzealous nor laissez-faire. Importantly, equip your human reviewers with guidance on what to do with borderline cases: maybe they contact the user for additional verification (e.g. a live video call to prove identity), or they compare against known datasets (like do a reverse image search for the photo).

- Prepare for regulatory scrutiny around synthetic media: Laws and regulations are rapidly evolving to address AI-generated content. The EU’s AI Act, for example, sets transparency obligations for AI-generated media and could expose companies that distribute undisclosed deepfakes to penalties. Even outside formal laws, expectations are rising from consumers and partners that businesses will handle AI content responsibly. It’s crucial to get ahead of this by implementing and documenting your detection processes now. Develop policies for how you respond to detected AI fakes (Do you label them? Remove them? Notify users?). Keep logs of detection results and actions taken – this creates an audit trail to demonstrate due diligence. If regulators or auditors come knocking, you want to show that you have a systematic, consistent program to deal with synthetic media. Review and update that program regularly as the threat landscape changes. Being proactive here not only mitigates legal risks (avoiding fines or liability for negligence), it also positions your company as trustworthy in an era when manipulated content is a known problem.
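
The escalation tiers and audit trail described in this list can be sketched together. The cutoffs below (90% and 50%) are the example figures from the escalation bullet. The action names and record fields are assumptions, and a real deployment would set thresholds from its own measured error rates.

```python
import json
from datetime import datetime, timezone


def route(ai_probability: float) -> str:
    """Map a detector score to an action using the example tiers above."""
    if ai_probability >= 0.90:
        return "block"
    if ai_probability >= 0.50:
        return "manual_review"
    return "pass"


def audit_record(image_id: str, ai_probability: float) -> str:
    """Build one JSON line recording the score, the action, and a UTC timestamp."""
    return json.dumps({
        "image_id": image_id,
        "ai_probability": ai_probability,
        "action": route(ai_probability),
        "checked_at": datetime.now(timezone.utc).isoformat(),
    })


# Hypothetical image ids and scores, one per tier.
for image_id, score in [("img-001", 0.97), ("img-002", 0.64), ("img-003", 0.08)]:
    print(audit_record(image_id, score))
```

Writing a record even for images that pass is deliberate: the log then shows that every image was checked, not only the ones that were blocked.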

How Detector24 Helps Detect AI-Generated Images Reliably

Navigating this new reality of AI-generated content is daunting, but solutions like Detector24 are built to meet the challenge. Detector24 is an advanced AI-powered detection platform designed specifically for real-world abuse cases of synthetic media, offering state-of-the-art AI content detection with reliable results across diverse content types. Here’s how it helps businesses and trust teams stay a step ahead:

- AI-Trained Models for Real-World Abuse: Detector24’s image detection engine is powered by machine learning models that have been trained on diverse, real-world data – not just laboratory examples. This means it’s seen the kinds of fakes criminals and bad actors actually use, from GAN-generated profile pics to diffusion-made fake documents. The models zero in on the subtle pixel patterns and artifacts discussed earlier, operating like a forensic expert that never tires.

- Generator-Agnostic Detection: One standout feature is that Detector24 takes a generator-agnostic approach. It doesn’t matter if an image was created by a well-known model (Midjourney, DALL·E, Stable Diffusion, etc.) or an obscure custom AI – the detection analyzes the image itself for authenticity signals, rather than relying on a fixed list of known model signatures.

- Scalable APIs for Easy Integration: Detector24 was built API-first, making it easy to integrate into your existing workflows. For onboarding and KYC, you can call the Detector24 API as soon as a user uploads an ID or selfie – getting an instant verdict on whether the image is genuine or suspicious. For content moderation, plug the API into your platform so that each user-uploaded image (profile photo, post, ad, etc.) is screened in real-time. And for investigations or audits, you can feed batches of images through the API or use Detector24’s dashboard to analyze historical content. Detector24 is designed for large-scale operations, handling billions of images per month for enterprise clients and supporting the needs of growing businesses.

- Confidence Scoring and Detailed Insights: When Detector24 analyzes an image, it doesn’t just give a binary answer. It returns a confidence score along with rich insights. You might get a result like “95% likely AI-generated” along with indicators of why – e.g., “unusual noise patterns detected” or “inconsistent EXIF camera signature.” These details help your team understand and trust the findings. The platform analyzes specific elements of each image to verify originality and detect AI-generated avatars, helping prevent the spread of misleading content such as fake profiles or impersonation attempts. You can configure threshold actions based on the score, as discussed in best practices.

- Built for Trust, Safety, and Fraud Teams: Detector24 isn’t a consumer toy or a generic AI demo – it’s built with the needs of enterprise trust & safety, compliance, and fraud prevention teams in mind. The platform emphasizes reliability and minimal false positives, so you’re not chasing ghosts. It supports compliance requirements by keeping data secure and providing the logs/reports you need. And it’s constantly updated to handle emerging threats – whether that’s a new style of AI-generated fake IDs or the latest viral deepfake technique.

In a world where AI can spin up fake content at scale, Detector24 offers the scalable, reliable countermeasure. It allows businesses to automate the detection of AI-generated images in real-time, weed out fraudulent content before it causes harm, and maintain the integrity of their platforms and processes. From preventing onboarding fraud to safeguarding your brand’s reputation, Detector24’s robust detection capabilities become an indispensable part of a modern digital trust strategy. With solutions like this, companies can embrace the benefits of AI creativity while confidently filtering out the malicious fakes – preserving trust in the visuals we see and share every day.
