AI Content Moderation with Detector24: A Comprehensive Guide to Moderation Models

The Importance of Content Moderation

In today’s digital landscape, content moderation is essential for maintaining safe and positive online communities. Every minute, users upload an enormous volume of content – for example, more than 500 hours of video are added to YouTube each minute. Social networks, forums, and gaming platforms host millions of posts, images, and videos daily. Without effective moderation, harmful material such as hate speech, graphic violence, nudity, or disinformation could spread unchecked, putting users and brands at risk. Content moderation ensures that user-generated content aligns with community guidelines and legal standards, protecting users from offensive or dangerous material and preserving the platform’s reputation. Simply put, robust moderation keeps online spaces welcoming and compliant.

However, the sheer scale of user content makes moderation a colossal challenge. Relying on human moderators alone becomes impractical – no team of humans can manually review billions of daily posts in real time. Additionally, human moderation is slow and labor-intensive, often struggling to catch content fast enough to prevent harm. Problematic posts can go viral in minutes, meaning delays in removal have real consequences. Furthermore, exposing human moderators to endless streams of disturbing content takes a severe psychological toll. Moderators routinely face graphic violence, sexual exploitation, hate speech, and other distressing material, leading to stress, anxiety, and even trauma. This high-pressure, emotionally taxing work can result in burnout and errors. In summary, content moderation is vital, but manual methods alone are insufficient in the modern era of massive, 24/7 content creation.

The Rise of AI-Powered Moderation

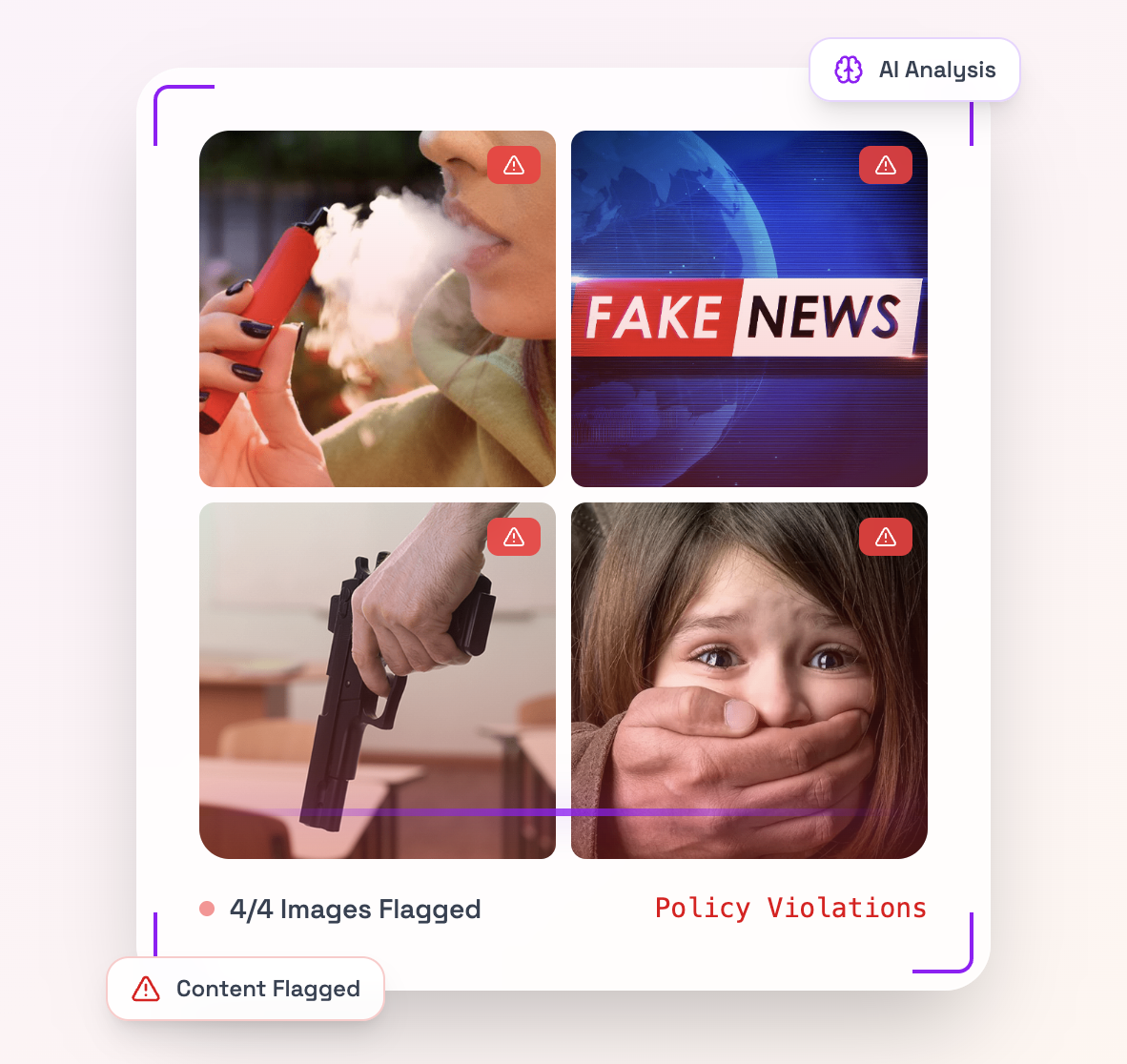

Given the limitations of manual moderation, platforms are increasingly turning to Artificial Intelligence (AI) to help tackle the content deluge. AI content moderation refers to using machine learning models to automatically review and filter user content for policy violations or safety issues. These AI systems can analyze text, images, audio, and video at a scale and speed that humans simply cannot match. Major tech companies have already demonstrated the impact of AI in this domain – for instance, Facebook reported that in one quarter 95% of the hate speech removed from its platforms was first detected by AI before any user reported it. This proactive identification shows how AI can surface most of the content a platform ultimately removes before users have to report it.

Why is AI so effective for content moderation? AI models excel at pattern recognition and can be trained on large datasets of violative vs. acceptable content. This enables them to flag issues with a high degree of consistency and objectivity. Some key benefits of AI-powered moderation include:

- Speed and Real-Time Detection: AI systems work in milliseconds, allowing platforms to identify and remove inappropriate content almost instantly – even during live streams or chats. Quick removal prevents harmful posts from spreading widely and fosters a safer user experience.

- Scalability: Automated models can handle millions of content pieces continuously without fatigue. This scalability is critical when hundreds of hours of video and countless images/text posts are uploaded every minute.

- Consistency and Impartiality: AI algorithms apply moderation policies uniformly according to their training. They don’t get tired or emotionally influenced, so they enforce rules consistently across all content. This reduces the risk of human bias or erratic decisions and gives users a predictable, fair experience.

- Reduced Human Burden and Error: By handling the bulk of routine moderation, AI relieves human moderators from reviewing the most obvious and repetitive violations. This mitigates human error (which can occur due to fatigue or oversight) and lets moderators focus on complex edge cases that require judgment. Importantly, it also shields moderators from the worst content – fewer people have to personally see graphic violence or abuse, helping to protect moderator mental health.

- Adaptive Learning: Modern AI moderation models continuously improve by learning from new data. They can adapt quickly to evolving content trends – for example, new slang, memes, or novel forms of hate speech and tactics used by bad actors. This adaptability means AI systems can stay ahead of emerging threats better than static manual guidelines.

Of course, AI moderation is not perfect. There are challenges like false positives (flagging benign content by mistake) or false negatives (missing some violations). Achieving the right balance often requires a hybrid approach, where AI filters and flags content and human moderators review borderline cases. Nonetheless, AI has proven to be a game-changer for moderation efficiency. It allows platforms to enforce content rules at scale with unprecedented speed and accuracy, making online environments safer and more enjoyable for users.
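To make the false positive / false negative trade-off concrete, here is a minimal Python sketch that computes precision, recall, and false positive rate from a table of outcomes. The counts are made up for illustration and do not describe any real model; the class and function names are our own.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Outcome counts from comparing model flags against human-reviewed labels."""
    true_positives: int   # violations correctly flagged
    false_positives: int  # benign content flagged by mistake
    false_negatives: int  # violations the model missed
    true_negatives: int   # benign content correctly left alone


def precision(c: ConfusionCounts) -> float:
    """Share of flagged items that were real violations."""
    flagged = c.true_positives + c.false_positives
    return c.true_positives / flagged if flagged else 0.0


def recall(c: ConfusionCounts) -> float:
    """Share of real violations that the model caught."""
    violations = c.true_positives + c.false_negatives
    return c.true_positives / violations if violations else 0.0


def false_positive_rate(c: ConfusionCounts) -> float:
    """Share of benign items that were flagged by mistake."""
    benign = c.false_positives + c.true_negatives
    return c.false_positives / benign if benign else 0.0


def accuracy(c: ConfusionCounts) -> float:
    """Share of all items the model classified correctly."""
    total = (c.true_positives + c.false_positives
             + c.false_negatives + c.true_negatives)
    return (c.true_positives + c.true_negatives) / total if total else 0.0


# Illustrative numbers only: 10,000 reviewed posts, 200 of them real violations.
counts = ConfusionCounts(true_positives=180, false_positives=98,
                         false_negatives=20, true_negatives=9702)

print(f"accuracy={accuracy(counts):.3f} precision={precision(counts):.3f} "
      f"recall={recall(counts):.3f} fpr={false_positive_rate(counts):.3f}")
```

With these illustrative counts the model is right on 98.8% of posts and catches 90% of violations, yet only about 65% of its flags are real violations, because violations are rare. That gap is one reason borderline flags are usually sent to human review rather than removed automatically.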

AI Moderation Models: How They Work

AI content moderation relies on specialized machine learning models trained for various types of media and policy categories. These models use advanced techniques in computer vision, natural language processing (NLP), and audio analysis to recognize problematic content. Here’s a brief overview of how these AI moderation models function:

- Image Moderation Models: Using deep learning (e.g. convolutional neural networks), image models analyze a picture’s pixels to detect visual features that may violate policies. For example, a nudity detection model is trained on thousands of labeled images to recognize explicit nudity or sexual content, while a violence detector learns to spot blood, weapons, or fighting scenes. These models output confidence scores or labels indicating the presence of disallowed content (such as “adult content” or “graphic violence”). Advanced vision models can also understand context – for instance distinguishing a medical image or art from truly explicit content – to reduce false alarms. They often combine object detection (finding specific items like guns or drugs in the image) with scene analysis (understanding the overall scenario).

- Video Moderation Models: Video moderation extends image analysis across time. AI models process video streams frame-by-frame (or scene-by-scene) and use temporal analysis to detect issues that unfold over a sequence. For instance, a video violence detector will examine each frame for violent imagery and also track movement or escalating actions in the clip. Some models use temporal context to differentiate a quick accidental scene from sustained graphic violence. Due to the volume of frames, efficiency is key – these models leverage optimized deep learning algorithms to scan videos in near real-time. They output flags for any segment of video containing policy violations (like a timestamp where nudity or hate symbols appear).

- Audio Moderation Models: Audio-based moderation AI focuses on speech and sound analysis. A common approach is to use speech-to-text transcription combined with text moderation models – essentially converting audio to text and then scanning for profanity, hate speech, harassment, or other banned language. More advanced audio models can directly analyze acoustic patterns; for example, detecting the unique signatures of an AI-generated (cloned) voice versus a real human speaker. They can also identify distress signals in voice (such as someone expressing crisis or self-harm intent) and detect sounds related to unsafe content. Audio moderation models enable screening of voice chats, live audio streams, podcasts, or music for compliance.

- Text Moderation Models: NLP-powered moderation models evaluate written content (posts, comments, messages) for any form of policy violation. These models use techniques like transformer-based language models and classifiers to understand context and meaning. They can flag toxic language (e.g. slurs, bullying, extreme profanity), hate speech, sexual solicitations, and other disallowed text. Some models specialize in detecting specific categories like spam/fraud (phishing attempts, scams), personal data requests (to prevent sharing of private information), or misinformation. The AI is trained on large corpora of labeled examples so it learns to predict the likelihood that a given text snippet is harmful or violates guidelines. Modern text moderation AI can even handle nuances like sarcasm or coded language to an extent, and operate in multiple languages using multilingual embeddings.

These AI moderation models typically return a score or label for each piece of content, which can then trigger automated actions. For instance, if an image is classified as 98% likely to contain nudity, it can be automatically blocked or sent to human moderators for review, depending on the platform’s policy. By combining a variety of models (image, video, audio, text), a platform can achieve a comprehensive moderation system covering all content modalities. Next, we will explore Detector24’s extensive suite of AI moderation models and how they empower content moderators to keep platforms safe.
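The score-to-action step described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor’s API: the `Action` names and the two threshold values (`block_at`, `review_at`) are assumptions that each platform would tune to its own policy.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "human_review"
    BLOCK = "block"


def route(score: float, block_at: float = 0.95, review_at: float = 0.60) -> Action:
    """Map a model confidence score in [0, 1] to a moderation action.

    Thresholds are illustrative defaults, not recommended values.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score >= block_at:
        return Action.BLOCK    # confident enough to act automatically
    if score >= review_at:
        return Action.REVIEW   # uncertain: send to a human moderator
    return Action.ALLOW


# An image scored 0.98 for nudity is blocked; a 0.70 score goes to review.
print(route(0.98), route(0.70), route(0.10))
```

Keeping two thresholds instead of one is what makes the hybrid approach possible: the band between them defines how much content humans see.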

Detector24’s Comprehensive AI Moderation Model Catalog

Detector24 is a leading AI content moderation platform that offers a wide range of pre-trained moderation models covering images, videos, audio, and text. Its catalog lists more than 35 AI-powered moderation models (spanning more than 100 content categories) to automatically analyze and filter content. This all-in-one solution enables businesses to mix and match specialized detectors to fit their community guidelines. According to Detector24, its models can distinguish over 100 types of content – including nudity, violence, hate symbols, drugs, weapons, self-harm and more – which it describes as complete protection for a platform. The breadth of the catalog means that most common moderation concerns are covered by a dedicated model.

A key advantage of Detector24’s models is that they are described as context-aware and highly accurate. The system is designed to reduce false positives by understanding context. For example, the AI can tell apart an innocent beach snapshot from explicit adult content, or a prop weapon in a movie scene from a real threat. The models are continuously refined on diverse datasets to keep accuracy high (often above 90%, according to Detector24) while minimizing mistaken flags. This allows automated moderation to be strict on truly harmful content yet lenient on harmless posts that might superficially appear risky. Additionally, Detector24’s platform offers real-time processing speeds – image checks often complete in a fraction of a second (e.g. 100–250ms per image) and video analysis is optimized for frame-by-frame real-time scanning. In practice, this means integrating Detector24’s API into your application can provide content checks without noticeable delay to users.

Let’s break down Detector24’s moderation models by content type and their capabilities:

Image Moderation Models

Detector24’s image moderation suite covers all the standard NSFW and safety categories one would expect, and more. These AI vision models analyze images and return structured results indicating any detected issues. Some of the notable image moderation models include:

- Nudity & Adult Content Detection: Flags images containing nudity, sexual activities, or pornographic content. This model helps platforms automatically remove or blur explicit images to maintain a PG-13 environment. It is finely tuned to catch full nudity as well as partial nudity or sexually suggestive content, while ignoring innocuous skin exposure (like swimwear) to avoid false positives.

- Violence and Gore Detection: Detects graphic violence, blood, gore, physical fights, or abuse in images. This model enables rapid identification of violent or disturbing imagery (e.g. scenes of war or accidents) so that it can be age-restricted or reviewed before display. It’s useful for social apps, news sites, or any service needing to filter graphic content.

- Weapons Detection: Identifies weapons such as firearms, knives, or explosives in images. This is important for preventing images that glamorize violence or pose threats. For instance, an image showing a gun or a person holding a weapon can be auto-flagged by the model, allowing moderators to assess context (e.g. is it a hunter’s lawful photo or a dangerous situation?). Detector24’s models can recognize common weapon types with high accuracy.

- Hate Symbol & Extremism Detection: Beyond textual slurs, images can contain hate symbols (like swastikas, extremist group flags, or hand signs). Detector24 covers hate imagery by detecting such symbols or extremist logos embedded in pictures. This is crucial for curbing hate speech that appears in memes, graffiti, or apparel within images.

- Minor Presence & Age Detection: Specialized models can determine if an image contains minors (children) and even estimate age groups. This is valuable for enforcing age-appropriate content rules – for example, flagging images of unaccompanied minors in a platform where they shouldn’t appear, or helping detect possible child exploitation content. An age detection model (with ~91% accuracy as reported) can classify if a person appears to be a minor or adult. This assists in compliance with regulations and community standards protecting children.

- Face Occlusion and Identity Verification: Detector24 provides models like Face Occlusion Detection, which checks if a person’s face in an image is obscured (by masks, sunglasses, etc.), and Wanted Persons Detection, which performs face recognition against criminal databases (like Interpol or FBI lists). These have security and law enforcement applications – for example, ensuring the face in an ID verification selfie is not covered, or scanning user profile photos against known bad actors. The wanted-persons model is reported to match faces against watchlists with high accuracy (97%+), aiding in automated background checks.

- Image Integrity and Deepfake Detection: With AI-generated imagery on the rise, Detector24 offers AI Image Generation Detection to determine if an image has been AI-generated or manipulated. This model looks for telltale signs of synthetic images (from tools like Midjourney, DALL·E, etc.) and can signal when content might be deepfake or altered. In conjunction, an Image Deepfake Detection model specifically targets face-swap or manipulated face images (common in deepfake porn or misinformation). These tools help moderators and platforms preserve authenticity and combat visual disinformation by flagging fake images.

- Restricted Substance Detection: Several models handle age-restricted or illicit content visible in images. For example, Detector24 can detect drugs and paraphernalia (pills, syringes, cannabis plants, joints, needles) so that content involving illegal drug use can be caught. Similarly, there are models to identify alcoholic beverages (beer bottles, wine glasses, etc.) and tobacco products (cigarettes, vaping devices). While images of a wine glass or cigarette aren’t outright “banned” on many platforms, having these detectors allows platforms to enforce age gating (mark content as for adults only) or comply with advertising policies that restrict such content.

- Gambling and Money Detection: To assist with certain platform policies (for instance, social networks that disallow gambling promotion or counterfeit money sales), Detector24 includes models to detect gambling scenes (casino tables, slot machines, playing cards) and money or banknotes displayed in images. These can flag posts that encourage gambling or fraudulent money-related schemes.

- Sensitive Scene Detection: Some images might not be graphic violence but still depict sensitive scenarios, such as destruction, accidents, or natural disasters (e.g. buildings on fire, car crashes) or military combat scenes. Detector24’s catalog has detectors for Destruction & Fire and Military Scenes, which can be useful for news or user-generated content platforms to tag or review such content appropriately (for instance, to provide content warnings or limit visibility due to potentially distressing imagery).

- Text and Document Analysis in Images: Uniquely, Detector24 also offers models that analyze text within images. The Graphic Language Detection model can perform OCR (optical character recognition) on an image to read any overlaid or embedded text, and then detect if that text contains profanity, slurs, or other offensive language. This is extremely helpful for moderating memes, images of signs or screenshots of text conversations, which often accompany abusive content. Additionally, a Document Classifier model can identify types of documents in an image (passport, ID card, invoice, etc.), which is useful for verification processes (KYC) or moderation of posted images to prevent sharing of personal IDs publicly. There’s also Document Tampering Detection to spot if an uploaded document image has been photoshopped or forged, as well as Document Liveness Detection to tell if a document photo was taken live or is a screen/photo printout (to counter fraud). These latter tools blur the line between content moderation and fraud prevention, but they showcase how Detector24’s image models go beyond just flagging bad content – they also help ensure authenticity and compliance in user-uploaded imagery.

- Content Categorization and Rating: To round out image analysis, Detector24 provides content description models like Content Rating, which automatically classifies an image as PG, PG-13, or R-rated based on its nudity, violence, or suggestive content levels. This is useful for platforms needing to label content for age-appropriateness or implement parental controls. Another model detects objects like vehicles (cars, trucks, bicycles) in images – while not a “moderation” need per se, Vehicle Detection can enrich content metadata or be used to filter images (for example, a marketplace could automatically sort listings into categories when a car is detected).

Overall, Detector24’s image moderation models allow for extremely granular control. Platforms can deploy the specific detectors relevant to their community guidelines. For instance, a family-friendly social app might use nudity, violence, and profanity-in-image detectors, while a fintech app might use document fraud detectors. All image models can work together to scan an upload and return a comprehensive report of any issues present. With fast API responses (often under a few hundred milliseconds per image) and high accuracy, these models dramatically reduce the workload on human moderators by filtering out the bulk of problematic images automatically.
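The idea of deploying only the detectors relevant to a platform can be sketched as a small configuration step. In the Python example below, the profile names, detector names, and score dictionary are all hypothetical; they only stand in for whatever identifiers and response format a real catalog uses.

```python
# Hypothetical detector names; a real catalog will use its own identifiers.
PROFILES = {
    "family_social": {"nudity", "violence", "text_in_image_profanity"},
    "fintech_kyc": {"document_tampering", "document_liveness"},
}


def build_report(scores: dict[str, float], profile: str, threshold: float = 0.8) -> dict:
    """Keep only the detectors enabled for a profile and list those over threshold."""
    enabled = PROFILES[profile]
    relevant = {name: s for name, s in scores.items() if name in enabled}
    flagged = sorted(name for name, s in relevant.items() if s >= threshold)
    return {"profile": profile, "scores": relevant, "flagged": flagged}


# Assumed per-detector scores for one uploaded image.
scores = {"nudity": 0.03, "violence": 0.91, "document_tampering": 0.88}
report = build_report(scores, "family_social")
print(report["flagged"])
```

The same upload produces a different report under each profile, which is the point: the family-friendly app acts on the violence score, while the fintech app would act on the document tampering score.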

Video Moderation Models

Video content poses a unique challenge because it’s essentially a stream of images (frames) along with audio. Detector24 addresses this with dedicated video moderation models that incorporate frame-by-frame analysis and temporal pattern recognition. The platform’s video models include counterparts to many of the image detectors, adapted for motion content. Key video moderation capabilities are:

- Video Nudity & Violence Detection: These models extend the image-based nudity and violence detectors into the video domain, scanning each frame for inappropriate visual content. The Violence Detection (Video) model can pick up on violent scenes in a video clip, while ignoring flashes of inappropriate content that might be momentary or accidental. By analyzing sequences of frames, the AI can detect sustained violence or sexual content even if it’s briefly obscured. Detector24’s video nudity/violence detectors allow moderation of user-generated videos, live streams, or archived footage, ensuring that graphic or explicit scenes are caught for review. Impressively, the system processes video in near real-time, meaning it can potentially be used on live streams to flag or take down streams as violations occur.

- Deepfake Video Detection: Just as with images, videos can be deepfaked (e.g. swapping someone’s face in a video). Detector24 offers a Video Deepfake Detection model, labeled in its catalog as a “BYNN SOTA model”, that detects manipulated faces in video content. With a reported accuracy of about 99.9%, this helps identify deepfake videos or any AI-generated face swaps, which is increasingly important for preventing misinformation or non-consensual explicit videos.

- Face Recognition & Liveness in Video: The Wanted Persons Detection (Video) extends the face recognition to moving footage, allowing real-time identification of known individuals on watchlists in video streams (such as CCTV or live broadcast). It can scan each frame for faces and cross-reference against a database of mugshots with high precision (97%+ accuracy). Similarly, Face Liveness Detection in video is used for biometric authentication scenarios – ensuring that a person on camera is physically present (not a video replay or mask) by analyzing blinking, facial movements, and challenge-response actions. While these are specialized use cases, they demonstrate the robust video analysis capabilities in Detector24’s arsenal that go beyond simple content filtering.

- Content Rating for Videos: As with images, Detector24 can rate a video’s content as PG/PG-13/R by evaluating the cumulative presence of nudity, violence, or harsh language throughout the footage. This helps platforms automatically tag or restrict videos based on age suitability. A Content Rating (Video) model can save moderators significant time by auto-labeling most videos, with only edge cases requiring manual review.

- Comprehensive Moderation of Audio-Visual Streams: Detector24’s video moderation models aren’t limited to visuals – they can be combined with audio moderation (discussed next) for a full picture. For example, a video might not show violence but the audio has hate speech – with Detector24, the platform can detect both aspects. The video models perform scene detection and temporal analysis, meaning they recognize scene changes and context in the video, which improves accuracy (e.g., understanding if a violent scene is part of a news documentary versus a gratuitous clip). All this happens efficiently; Detector24 advertises real-time or near-real-time processing even for video, which is critical for moderating live content or very large volumes of videos.

Using Detector24’s video moderation, content moderators can confidently handle user-uploaded videos on their platform. The AI will flag segments of videos that need attention (with timestamps and labels for the type of violation), rather than moderators having to watch hours of footage blindly. This significantly streamlines the moderation workflow for video content – moderators only review the specific moments AI has identified, or they can quickly approve videos with no flags. This makes it feasible for platforms to uphold community standards even as video content explodes in popularity online.
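One simple way to turn per-frame scores into timestamped segments, while ignoring momentary flashes, is to require a minimum run of consecutive flagged frames. The Python sketch below assumes per-frame scores are already available; the function name, the 0.8 threshold, and the three-frame minimum are illustrative choices, not Detector24’s documented behavior.

```python
def flagged_segments(
    frame_scores: list[float],
    fps: float,
    threshold: float = 0.8,
    min_frames: int = 3,
) -> list[tuple[float, float]]:
    """Return (start_s, end_s) spans where the score stays at or above
    `threshold` for at least `min_frames` consecutive frames."""
    segments = []
    run_start = None
    # A -inf sentinel at the end closes a run that reaches the last frame.
    for i, score in enumerate(list(frame_scores) + [float("-inf")]):
        if score >= threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if i - run_start >= min_frames:
                segments.append((run_start / fps, i / fps))
            run_start = None
    return segments


# Frame 1 is a one-frame flash and is ignored; frames 3-6 form a sustained run.
scores = [0.1, 0.9, 0.1, 0.9, 0.9, 0.9, 0.9, 0.2]
print(flagged_segments(scores, fps=2))  # [(1.5, 3.5)]
```

A moderator would then jump straight to the 1.5s to 3.5s span instead of watching the whole clip.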

Audio Moderation Models

Audio content – whether in the form of voice chats, calls, music uploads, or podcasts – is another frontier for content safety. Detector24 provides audio moderation models that leverage signal processing and NLP to analyze audio streams. Notable audio-focused models include:

- Voice Safety (Audio) Detection: This model listens for unsafe or abusive language in audio. It can detect harassment, hate speech, extreme profanity, discrimination, or even criminal activity references spoken in an audio stream. Essentially, it’s like having a virtual moderator listening to voice chats or spoken content and flagging anything that violates community guidelines. This is vital for moderating live audio rooms, in-game voice chat, or any scenario where users communicate verbally. The model likely works by transcribing speech to text and then applying a text moderation filter, possibly augmented by acoustic analysis for tone. With Detector24’s multi-language NLP capabilities, this audio model can handle profanity/hate in multiple languages, making it very useful for global platforms.

- AI-Generated Music Detection: A rather specialized but forward-looking model, this detector can identify if a piece of music was generated by AI. As generative AI music tools proliferate, this model helps with copyright and authenticity – for instance, a platform can flag AI-composed music if it only wants human-created content, or to monitor potential copyright evasion via AI music. It uses audio pattern analysis to distinguish AI-composed audio from real recordings (with a reported accuracy of roughly 89%).

- Voice Deepfake Detection: Similar to image/video deepfakes, voices can be cloned by AI. Detector24’s Voice Deepfake Detection model analyzes audio to determine if a voice is likely synthesized or impersonated by AI (versus a recording of a real person’s voice). This is crucial for preventing impersonation scams, fake audio evidence, or illegitimate access (imagine AI-generated audio trying to bypass a voice-based verification). The model looks at subtle features of speech that AI often struggles with, helping to catch cloned voices or text-to-speech outputs. As an example application, a communication platform might use this to label calls or voice messages that are not from a live person.

- Other Audio Content Filters: Although they are not explicitly listed in the catalog excerpt reviewed here, an audio moderation suite could in general also include detection of sounds like gunshots or screams (for safety alerts), or identification of copyrighted music in streams. Detector24’s focus, however, seems to be primarily on voice and AI-generated music for now. Nonetheless, by combining the above models, a platform can automatically moderate audio content – e.g., moderate a live audio chat by flagging users who use slurs or by detecting if someone is playing AI voice clips or certain music in the channel that should not be allowed.

With audio models in place, content moderators don’t need to manually listen to every conversation or audio upload. The AI can transcribe and scan speech instantly, enforcing the same standards that would apply to text. This greatly enhances user safety in voice-based communities and keeps interactions civil. It’s part of Detector24’s goal of covering all content modalities: wherever users may encounter harmful content (be it written, visual, or spoken), an AI model is available to help moderate it.
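The transcribe-then-scan approach can be sketched as a two-stage pipeline in Python. Both stages are passed in as plain functions so the example runs without any real service; `fake_transcribe` and `fake_score_text` are stand-ins we invented, and a real system would plug in a speech-to-text engine and a trained text moderation model instead.

```python
from typing import Callable, NamedTuple


class AudioVerdict(NamedTuple):
    transcript: str
    score: float
    flagged: bool


def moderate_audio(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    score_text: Callable[[str], float],
    threshold: float = 0.8,
) -> AudioVerdict:
    """Transcribe audio, then score the transcript with a text moderation model."""
    transcript = transcribe(audio)
    score = score_text(transcript)
    return AudioVerdict(transcript, score, score >= threshold)


# Stand-ins so the sketch runs without a real speech or moderation service.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the bytes are already the spoken words


def fake_score_text(text: str) -> float:
    return 0.95 if "idiot" in text.lower() else 0.05


verdict = moderate_audio(b"You are an idiot", fake_transcribe, fake_score_text)
print(verdict.flagged, verdict.score)
```

Because the text stage is shared, any improvement to the text model applies to voice content as well, which is how the same standards end up enforced in both places.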

Text Moderation Models

Text is the oldest and most pervasive form of user content, ranging from social media posts and comments to chat messages and forum discussions. Detector24’s platform includes robust text moderation models that utilize advanced NLP for understanding and classifying text. These models can operate in real time on large volumes of text, catching problematic content before it reaches other users. Key text-based moderation models in Detector24’s catalog include:

- Toxicity and Hate Speech Detection: Although no such model is explicitly named in the catalog excerpt reviewed here, any comprehensive moderation suite needs models that detect graphic or abusive language (profanity, slurs, harassment) in text. Detector24 does list Graphic Language Detection, which applies OCR to text inside images; for pure text, similar profanity filters and hate speech classifiers are essential. These models scan user text for any form of harassment, bullying, threats, or derogatory language towards protected groups. By flagging high-risk messages, platforms can auto-moderate toxic comments and foster a healthier community dialogue.

- Mental Health & Self-Harm Detection: One of Detector24’s specialized text models is for mental health signals. This AI can detect when a user’s text indicates anxiety, depression, or crisis (e.g. mentions of self-harm, suicidal thoughts, extreme hopelessness). With 99.9% reported accuracy, the model can serve a critical safety function – identifying users who may be in need of help or intervention. Platforms concerned about user well-being (such as forums, social networks, or chat services) can use this to proactively flag posts that suggest someone is in crisis, enabling moderators to respond with resources or support rather than punitive action.

- Misinformation & Fake News Detection: In the era of rampant misinformation, Detector24 provides a Fake News Detection model for text content. It attempts to identify when a piece of text (like a post or article) contains false or misleading information. Likely using a combination of keywords, sentiment, and possibly external fact databases or model knowledge, it gives a probability that the content is disinformation. While no AI can be 100% perfect in judging truth, this model can be a helpful first-pass filter to flag potentially dubious content for fact-checking. It helps moderators slow the spread of viral fake news by catching it early.

- PII and Solicitation Detection: Sharing or soliciting Personally Identifiable Information (PII) is another category addressed by Detector24’s text models. The PII Solicitation Detection model flags when users ask for or share sensitive personal data (like someone requesting another user’s email, phone number, or address, or sending their own). This is particularly important on platforms with minors (to prevent grooming or privacy breaches) and generally for user safety and privacy compliance. The model, with a reported accuracy of about 99.5%, can catch phrases like “DM me your number” or “what’s your address?” and alert moderators or auto-delete the message before any unsafe exchange happens.

- Fraud and Spam Detection: Detector24’s Fraud Text Detection model focuses on catching scams, phishing attempts, and other fraudulent messages. Using pattern recognition, it can identify common scam phrases (e.g. “Congratulations, you’ve won a prize, click here...”) or phishing layouts, as well as more subtle social engineering attempts. With a reported accuracy of nearly 99.9%, it serves as a powerful spam filter to keep your platform free of scammers. This helps protect users from financial fraud and keeps the community experience clean. It can work hand-in-hand with traditional spam detection techniques by adding an intelligent AI layer.

- AI-Generated Text Detection: With AI systems like GPT-4 being used to generate content, some platforms may want to discern AI-written text from human-written. Detector24 offers an AI-Generated Text Detection model that analyzes text to predict if it was produced by AI language models. This model (around 93-94% accuracy) could help moderators identify bot-generated reviews, fake accounts posting AI-written comments, or detect AI use where it’s not allowed (e.g., AI-generated essays in a learning platform). While AI text detection is an evolving field, having this tool in the toolbox is valuable for content integrity and spotting inauthentic behavior.

- Advanced Sentiment Analysis: Lastly, Detector24 includes an Advanced Sentiment Analysis model for text, boasting very high accuracy (~98.8%). This isn’t directly about policy violations, but sentiment analysis can gauge the emotional tone of content (positive, negative, neutral). For moderation, sentiment analysis can provide context – e.g., extremely negative or angry comments might merit a closer look even if they don’t contain explicit profanity. It can also be used to measure community health generally. By analyzing sentiment trends, moderators or community managers get insights into user satisfaction or conflict areas. In some cases, sentiment detection combined with toxicity detection can prioritize which toxic comments are most likely to escalate (for example, a very negative, highly emotional post might need urgent moderation attention).

With these text models, Detector24 enables real-time automated moderation of chat messages, comments, forum posts, and any other text content. The models can act as a first line of defense – auto-filtering obvious spam or slurs, deleting posts that share private info, and flagging borderline content for human review. This significantly improves moderation efficiency. As one industry analysis notes, AI-powered moderation filters and prioritizes content, reducing the workload and stress on human moderators. Moderators can then spend their time evaluating the nuanced cases (for example, determining if a reported insult was contextually acceptable or truly harmful) instead of wading through hundreds of blatant rule violations. Overall, the text models ensure that online discussions remain civil, accurate, and safe, while empowering moderators to manage large communities without being overwhelmed.

Bringing It All Together for Moderators

Individually, each of the above AI models addresses a specific facet of content moderation. The real power of Detector24’s platform comes from combining these models to cover content holistically. Content moderation rarely involves just one type of content: a single user post might include an image, an embedded video, and a text caption. Detector24 allows for multi-modal analysis, scanning the image for visual issues, the attached text for language issues, and any audio or video for issues specific to those formats, all in one pass. The combined results give a 360-degree view of the content’s compliance.
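
One way to picture that combined view is as a single report per post that collects findings from every modality. The sketch below is a hypothetical data structure, not Detector24's response format: the `Finding` and `PostReport` names, the label strings, and the 0 to 1 score scale are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Finding:
    """One model result for one part of a post (hypothetical shape)."""
    modality: str  # "image", "text", "audio", or "video"
    label: str     # e.g. "nudity", "hate_speech" (illustrative labels)
    score: float   # assumed model confidence in [0, 1]


@dataclass
class PostReport:
    """All findings for a single post, across modalities."""
    post_id: str
    findings: list[Finding] = field(default_factory=list)

    def worst(self) -> Finding | None:
        """Return the highest-scoring finding, or None if there are none."""
        return max(self.findings, key=lambda f: f.score, default=None)

    def by_modality(self) -> dict[str, float]:
        """Return the highest score seen for each modality."""
        out: dict[str, float] = {}
        for f in self.findings:
            out[f.modality] = max(out.get(f.modality, 0.0), f.score)
        return out


if __name__ == "__main__":
    report = PostReport("post-1", [
        Finding("image", "nudity", 0.10),
        Finding("text", "hate_speech", 0.92),
        Finding("text", "spam", 0.40),
    ])
    print(report.worst())
    print(report.by_modality())
```

Keeping the per-modality maximum alongside the single worst finding lets a moderator see at a glance which part of the post triggered the flag.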

For content moderators and trust & safety teams, deploying Detector24’s AI models means a lot of heavy lifting is handled automatically. The AI can flag potentially violative content within seconds of upload (or even pre-publication), preventing many users from ever seeing it. It also prioritizes the moderator queue by severity – e.g., truly egregious content (violence, sexual abuse imagery, explicit hate speech) can be auto-removed or highlighted for immediate human review, while minor issues can be queued normally. This ensures human moderators spend time where it matters most, improving overall enforcement quality. Moreover, consistent AI filtering leads to a fairer environment: users know the rules are enforced uniformly, which builds trust in the platform.
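
Severity-based queueing of this kind maps naturally onto a priority queue. The following is a minimal sketch using Python's standard `heapq` module; the severity tiers, label names, and the `ReviewQueue` class are hypothetical and chosen only to illustrate the ordering, not taken from Detector24.

```python
import heapq
import itertools

# Hypothetical severity tiers: a lower number is reviewed sooner.
SEVERITY = {
    "sexual_abuse_imagery": 0,
    "violence": 0,
    "hate_speech": 1,
    "spam": 3,
}
DEFAULT_SEVERITY = 2  # used for labels not listed above


class ReviewQueue:
    """Moderator queue ordered by severity tier, FIFO within a tier."""

    def __init__(self) -> None:
        self._heap = []
        # Monotonic counter breaks ties so equal-tier items keep arrival order.
        self._counter = itertools.count()

    def push(self, item_id: str, label: str) -> None:
        tier = SEVERITY.get(label, DEFAULT_SEVERITY)
        heapq.heappush(self._heap, (tier, next(self._counter), item_id))

    def pop(self) -> str:
        """Remove and return the most urgent item id."""
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = ReviewQueue()
    queue.push("a", "spam")
    queue.push("b", "violence")
    queue.push("c", "hate_speech")
    while queue:
        print(queue.pop())
```

Items in the most severe tier surface first regardless of when they arrived, while minor issues wait in the order they were flagged.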

Another major benefit of incorporating AI moderation is the protection of moderators’ mental health. Instead of manually sorting through the worst content, moderators see a pre-filtered set (with the most horrific material often blocked outright by AI). As experts have noted, leveraging AI automation minimizes moderators’ exposure to the most harmful material. This not only prevents psychological burnout but also allows moderators to be more productive and engaged in making thoughtful policy decisions on borderline cases. In essence, AI acts as a safety buffer and an assistant, doing the tedious and traumatic parts of the job so humans can focus on nuanced judgement and community management.

Detector24’s platform is built with easy integration in mind, offering SDKs and APIs so that companies can plug these models into their products quickly. Whether you run a social network, a marketplace, a gaming community, or a content hosting service, you can customize which models to use based on your needs. The moderation rules are customizable too – you can set thresholds for flagging vs. auto-removal, define custom lists (e.g., additional keywords or image patterns you consider unacceptable), and adapt the AI output to your specific community standards. This flexibility ensures the AI moderation pipeline aligns with your platform’s values and legal requirements.
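
To make the threshold and custom-list idea concrete, here is a small sketch of how such a policy could be expressed on the platform side. It does not use or reflect Detector24's SDK: the `ModerationPolicy` class, its default thresholds, and the rule that a custom keyword hit escalates to a flag are all assumptions for illustration. Keyword matching is a naive whitespace split and ignores punctuation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ModerationPolicy:
    """Hypothetical per-community policy; thresholds are illustrative."""
    flag_threshold: float = 0.6     # at or above: send to human review
    remove_threshold: float = 0.95  # at or above: remove automatically
    blocked_keywords: set[str] = field(default_factory=set)

    def decide(self, score: float, text: str = "") -> str:
        """Return "remove", "flag", or "allow" for one piece of content."""
        if score >= self.remove_threshold:
            return "remove"
        if score >= self.flag_threshold:
            return "flag"
        # A custom keyword hit escalates otherwise-allowed content to review.
        words = set(text.lower().split())
        blocked = {k.lower() for k in self.blocked_keywords}
        if words & blocked:
            return "flag"
        return "allow"


if __name__ == "__main__":
    policy = ModerationPolicy(blocked_keywords={"FreeCoins"})
    print(policy.decide(0.99))
    print(policy.decide(0.70))
    print(policy.decide(0.10, "get freecoins now"))
    print(policy.decide(0.10, "hello there"))
```

A stricter community might lower `flag_threshold`, while one that prefers human judgement could raise `remove_threshold` so that fewer items are removed without review.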

Conclusion

As user-generated content continues to grow exponentially, AI content moderation has become indispensable for keeping online platforms safe, inclusive, and in compliance with laws. Detector24’s extensive catalog of AI moderation models exemplifies how technology can empower content moderators and scale up the moderation process. By utilizing advanced models for images, videos, audio, and text, platforms can automatically detect everything from nudity and violence to hate symbols, scams, and deepfakes with remarkable speed and accuracy. The result is a more proactive moderation strategy: harmful content is flagged or removed before it can harm users or communities, and human moderators are supported rather than replaced, allowing them to make better decisions on the tough calls.

In this comprehensive look at Detector24’s offerings, we saw that each model targets a critical area of content risk – together, they form a robust shield against abuse and policy violations. Importantly, these AI tools also bring consistency and efficiency, applying moderation standards uniformly across millions of posts and vastly reducing the workload and emotional strain on human teams. Industry reports have shown that AI moderation improves response times and consistency, thereby enhancing user experience and trust on the platform. Early detection of problems means users are less likely to encounter toxic or dangerous content, which in turn fosters a positive community atmosphere.

For organizations looking to “power up” their content moderation, Detector24 provides a proven solution. Under the hood, its models rely on state-of-the-art deep learning and continuously learning algorithms, but they come in an accessible package that content teams can easily deploy. Embracing such AI moderation tools is not just about efficiency; it’s about responsibility. It shows your commitment to safeguarding users and upholding community standards at scale. By integrating Detector24’s AI moderation models, platforms can confidently grow and engage their user base without compromising on safety or quality of content.

In summary, AI content moderation is a game-changing approach to managing online content in 2025 and beyond. The importance of moderation cannot be overstated, and with solutions like Detector24’s model catalog, any organization can leverage cutting-edge AI to keep their platform clean, compliant, and user-friendly. The future of content moderation will undoubtedly involve humans and AI working hand-in-hand – and with the help of comprehensive AI model suites, we can ensure that future is a safer one for everyone online.
