Image Moderation: Detect & Filter Unwanted Images

Moderate user-generated images efficiently and in real time with Detector24's image moderation tool and API. Protect brand sponsors and customers from harmful and unsafe content by using advanced AI and media analysis to moderate large volumes of incoming images and other user-generated content. Because automated moderation processes large amounts of data in real time, it is well suited to high-traffic platforms. Image moderation is essential for protecting individuals and communities and for maintaining standards across digital spaces. Detector24's advanced AI models make it effortless to detect and filter unwanted content: flag nudity, violence, hate symbols, and other inappropriate imagery before it ever reaches your community.

Have questions? Talk to our sales team.

Comprehensive Content Detection

Powered by advanced AI models trained on millions of images

Detect explicit & adult content

Identify nudity, sexual content, and inappropriate imagery with high accuracy. Our models detect subtle variations and contextual nuances to minimize false positives.

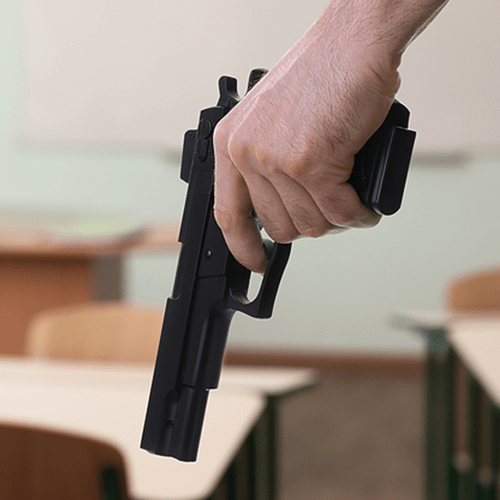

Flag violent & dangerous content

Detect weapons, gore, violence, and threatening imagery. Protect your platform from harmful content that violates community guidelines and regulations.

Lightning-fast processing at scale

Process thousands of images per second with our globally distributed infrastructure. Scale effortlessly from startup to enterprise with 99.9% uptime SLA.

How it works

Three simple steps to moderate your images

Upload via API

Send images to our API endpoint by URL or as base64. All common image formats are supported, including JPG, PNG, GIF, and WebP.

AI Analysis

Our models analyze the image across 25+ categories including NSFW, violence, hate symbols, and more in under 150ms.

Get Results

Receive detailed results with confidence scores for each category. Take action automatically based on thresholds you configure.

Built for every platform

Trusted by social networks, marketplaces, and UGC platforms

Social Media

Moderate user-generated content at scale

Marketplaces

Ensure product images meet guidelines

Dating Apps

Create safe environments for users

Content Platforms

Filter uploads in real-time

How Detector24's Image Moderation Works

Modern image moderation relies on artificial intelligence and machine learning to analyze images and identify potential violations of content policies. The process typically begins with an API call to a content moderation service, which processes the image and returns confidence scores for various types of inappropriate content. These confidence scores determine whether an image should be approved, blocked, or flagged for human review. Detector24's image moderation APIs can be tailored with custom rules and thresholds to meet the unique needs of different industries and brands.

Instant Analysis

Submit images via simple API calls. Our distributed infrastructure processes requests in under 150ms with 99.9% uptime.

AI-Powered Detection

Advanced neural networks trained on millions of images identify inappropriate content with industry-leading accuracy.

Actionable Results

Receive detailed confidence scores for each category, enabling you to automate moderation decisions with precision.

Trusted by Innovative Platforms Worldwide

Leading social networks, marketplaces, and online communities rely on Detector24 to keep their visual content safe. Detector24 delivers fast, accurate image moderation, giving customers a safe and compliant platform experience that meets their expectations. Clients who have evaluated other solutions consistently praise Detector24's accuracy and ease of integration, which rank alongside leading solutions such as Sightengine, a service known for its quick integration and accuracy. In fact, many report that our AI models "outperform other solutions" in both precision and speed while being simple to plug into existing workflows. Detector24 has become a key growth partner for innovative platforms that demand reliable, real-time image moderation.

Comprehensive Content Coverage for Image Moderation

Detector24's moderation toolkit covers 50+ content categories across every major risk area. Built on deep expertise in computer vision and Trust & Safety, our AI models use object detection to moderate images, and text detection with OCR to moderate the text embedded in them, so both visual content and embedded text comply with your platform's standards. Our image moderation tools return confidence scores that indicate the likelihood of inappropriate content, and they can identify and filter graphic violence, hate symbols, and offensive gestures. If you have a unique requirement, we can even develop custom detection models to meet your needs. Here are some of the content categories our platform can automatically detect and filter:

Adult Content & Nudity

Identify NSFW adult content and classify it into various levels of nudity or sexual suggestiveness. The API can detect degrees of nudity, from partial to explicit, and provide confidence percentages for each image.

Violence

Detect graphic or disturbing violence in images, such as physical assaults, weapons in use, gore, or executions.

Hate Symbols & Offensive Gestures

Recognize hateful, extremist, or offensive imagery and gestures.

Gore & Disturbing Imagery

Spot extremely graphic or horrific content involving blood, injury, or death. The system can also detect self-harm and classify images related to physical harm, injuries, or suicide.

Weapons

Detect weapons such as firearms or knives and discern the context in which they appear. The tool classifies firearms and other weapons into multiple subclasses based on usage context, such as holstered, being held, aimed at someone, or used in a threatening manner.

Drugs (Recreational & Medical)

Detect images showing illicit drug use or paraphernalia as well as improper displays of medications. The tool can detect both recreational and medical drugs.

Gambling & Money-Related Content

Identify scenes of gambling activities or money which might violate platform rules.

Alcohol & Tobacco

Flag images of alcohol consumption or tobacco use, which might be restricted (especially for underage audiences).

Minor & Personal Safety

Detect the presence of people and identify if a minor (child) is in the image – even if their face is not fully visible. This helps you apply stricter rules to content involving children.

Image Quality & Attributes

Evaluate technical attributes of images that might affect moderation or user experience. Detector24 can assess an image's quality (blurriness or sharpness), brightness, predominant colors, and even determine the image type (graphic, illustration, real photo, screenshot, etc.). This can help flag low-quality content or content that needs special handling (like screenshots).

Text & Codes in Images

Read text embedded in images using OCR and scan that text for policy violations, such as inappropriate or sensitive material. We detect overlaid text and meme text as well as QR codes.

Custom Lists

After detection, custom lists can be used to block repeatedly flagged or offensive images, streamlining moderation efforts and reducing costs by preventing duplicate analysis.

AI Generation and Editing

Detect AI-generated images, deepfakes, and manipulated content. Combat misinformation and ensure content authenticity.

Fraud Detection

Identify scammers, fake profiles, and fraudulent imagery. Protect your platform from malicious actors and impersonation.

Note: Detector24's ever-expanding library of models means if an unwanted content type exists, we likely detect it – and if not, we'll work with you to train a solution.
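The Custom Lists idea can be approximated on the client side. The sketch below is a minimal illustration under stated assumptions, not Detector24's implementation: it assumes you keep your own set of SHA-256 digests of images already judged offensive, and `analyze` is a hypothetical stand-in for whatever call returns your moderation decision. A digest match skips the analysis call entirely, which is how a list avoids paying for duplicate analysis.

```python
import hashlib
from typing import Callable


class ImageBlocklist:
    """Custom list of known-bad images, keyed by SHA-256 digest (exact byte matches only)."""

    def __init__(self) -> None:
        self._digests: set[str] = set()

    @staticmethod
    def digest(image_bytes: bytes) -> str:
        return hashlib.sha256(image_bytes).hexdigest()

    def add(self, image_bytes: bytes) -> None:
        self._digests.add(self.digest(image_bytes))

    def is_blocked(self, image_bytes: bytes) -> bool:
        return self.digest(image_bytes) in self._digests


def moderate_with_blocklist(image_bytes: bytes,
                            blocklist: ImageBlocklist,
                            analyze: Callable[[bytes], str]) -> str:
    """Check the custom list first; only call `analyze` (hypothetical) for unseen images."""
    if blocklist.is_blocked(image_bytes):
        return "block"  # known offender: no second analysis call needed
    decision = analyze(image_bytes)
    if decision == "block":
        blocklist.add(image_bytes)  # remember it so future re-uploads skip analysis
    return decision
```

An exact hash only catches byte-identical copies. A resized or re-encoded image produces a different digest, so matching near-duplicates would need a perceptual hash instead.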

Industry-Specific Use Cases

Image moderation is vital across a wide range of industries, each with its own unique challenges and requirements. Social media platforms depend on robust moderation to quickly detect and remove graphic violence, hate speech, and explicit content, helping to foster safe and inclusive communities. Online marketplaces use image moderation to prevent the listing of counterfeit products and illegal items, ensuring trust and compliance for buyers and sellers alike. Gaming platforms rely on image moderation to keep their communities free from harassment, hate speech, and offensive images, creating a positive experience for players. Content-sharing services also use image moderation to identify and remove explicit content, including nudity and violence, to protect viewers and uphold community standards. In each case, effective moderation is key to maintaining a safe, engaging, and compliant environment for all users.

Social Media

Moderate millions of user uploads daily. Real-time filtering ensures community guidelines are enforced automatically.

Marketplaces

Ensure product images meet quality standards and policies. Block prohibited items and fraudulent listings.

Gaming

Moderate player-generated content including avatars, screenshots, and in-game uploads. Create safe gaming environments.

Content-Sharing

Filter thumbnails and preview images for video platforms. Protect viewers from inappropriate or disturbing content.

Computer Vision and Image Moderation

Computer vision is at the heart of modern image moderation, enabling automated systems to interpret and understand the visual content of images and videos. By applying advanced algorithms, computer vision can detect objects, scenes, and activities that may indicate inappropriate content, such as weapons, graphic violence, or explicit imagery. Optical character recognition (OCR) further enhances moderation by extracting and analyzing text embedded within images, making it possible to identify hate speech, explicit language, or other policy violations that appear as text. When combined with machine learning, these technologies allow image moderation APIs to deliver high accuracy and efficiency, minimizing the need for human reviewers and reducing the risk of both false positives and missed violations. This powerful combination ensures that unwanted content is quickly identified and filtered, helping platforms maintain a safe and respectful environment for their users.
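As a rough illustration of the OCR step, suppose the text has already been extracted from an image (the response field it arrives in is not specified here). A minimal policy check could match it against a term list. `BLOCKED_TERMS` and `find_policy_violations` are hypothetical names, and a fixed word list is far cruder than the model-based text analysis described above.

```python
import re

# Hypothetical blocked terms; in practice these come from your own content policy.
BLOCKED_TERMS = ["buy followers", "free crypto", "scam"]


def find_policy_violations(ocr_text: str, blocked_terms: list[str]) -> list[str]:
    """Return the blocked terms that appear as whole words in OCR-extracted text."""
    hits = []
    for term in blocked_terms:
        # \b anchors prevent matches inside longer words (e.g. "scam" in "scampi").
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, ocr_text, flags=re.IGNORECASE):
            hits.append(term)
    return hits
```

For example, the meme text "FREE CRYPTO giveaway! Not a scam." yields `["free crypto", "scam"]`, while "Fresh scampi tonight" yields no hits.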

Easy Configuration to Fit Your Needs

No two communities are the same. Detector24 lets you configure the models to fit your custom needs in just a few clicks. You can create custom moderation workflows or processes tailored to your platform's requirements, ensuring trust and safety for your users. Pick and choose which categories to moderate and define what "inappropriate" means for your platform. You have fine-grained control over all our detection modules – you decide exactly what to filter out or flag for review.

For example, you can enable very specific sub-categories: want to allow images of weapons in a historical context but block images of guns being aimed at people? Simply adjust your settings. Only want to flag the most extreme hate symbols while tolerating mild gestures? You're in control. Our interface and API give you toggles for each subclass of content, so you can tailor moderation to match your community guidelines perfectly.
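One way to picture these per-subclass toggles is as a policy table that maps each subclass to an action. In this sketch the subclass names (`weapon.historical_context`, `weapon.aimed_at_person`, and so on), the `Rule` type, and `apply_policy` are all hypothetical; the real identifiers would come from the API documentation. When several subclasses fire, the most severe action wins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str       # "allow", "review", or "block"
    min_score: float  # the rule applies only when the subclass score reaches this value


# Hypothetical subclass names mirroring the examples in the text.
POLICY = {
    "weapon.historical_context": Rule("allow", 0.0),
    "weapon.aimed_at_person": Rule("block", 0.6),
    "hate.extreme_symbol": Rule("block", 0.5),
    "hate.mild_gesture": Rule("allow", 0.0),
}

SEVERITY = {"allow": 0, "review": 1, "block": 2}


def apply_policy(subclass_scores: dict[str, float], policy: dict[str, Rule]) -> str:
    """Return the most severe action triggered by any detected subclass."""
    decision = "allow"
    for subclass, score in subclass_scores.items():
        rule = policy.get(subclass)
        if rule is None or score < rule.min_score:
            continue  # subclass not moderated, or not confident enough to act on
        if SEVERITY[rule.action] > SEVERITY[decision]:
            decision = rule.action
    return decision
```

With this table, a confidently detected historical weapon is allowed, while a gun aimed at a person scoring 0.8 is blocked.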

Custom Thresholds

Set confidence score thresholds for automatic approval, flagging, or blocking

Model Selection

Choose which detection models to apply based on your content policies

Automated Actions

Configure automatic actions based on moderation results

Granular Rules and Custom Thresholds

Because Detector24 is highly customizable, you aren't stuck with a one-size-fits-all filter. You set your own confidence thresholds and moderation rules as part of a flexible moderation process. Different platforms have different standards, and we empower you to enforce yours. Easily define which score triggers a block or a review; these confidence scores determine which images are marked as inappropriate content.

For instance, you might choose to automatically block content if our nudity model is >75% confident the image contains pornography, allow content that scores below 50%, and flag for human review anything in between. Flagged images are then grouped into smaller subsets, allowing human reviewers to efficiently analyze manageable portions of content as part of the overall moderation process. You can fine-tune thresholds for each category (nudity, violence, etc.) to strike the right balance between catching bad content and avoiding false positives. All of these rules can be configured via our dashboard or adjusted on the fly through the API.
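The example thresholds translate directly into a three-band rule. This sketch uses the 75% and 50% figures from the paragraph as defaults; `decide` and `review_batches` are illustrative names, not part of any Detector24 SDK, and the batch size is whatever suits your review team.

```python
def decide(score: float, block_above: float = 0.75, allow_below: float = 0.50) -> str:
    """Three-band decision: block confident hits, allow low scores, review the rest."""
    if score > block_above:
        return "block"
    if score < allow_below:
        return "allow"
    return "review"  # 0.50 <= score <= 0.75 goes to a human


def review_batches(items: list[str], batch_size: int) -> list[list[str]]:
    """Split the human-review queue into fixed-size subsets for individual reviewers."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]
```

Note the boundaries: a score of exactly 0.75 or 0.50 lands in the review band, because blocking requires strictly more than 75% and allowing strictly less than 50%.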

Thanks to our expressive JSON results, it's straightforward to integrate Detector24's output into your backend logic. Every API response clearly indicates the probability of each content category (e.g. adult, violent, hateful content percentages) so your system can take automatic action based on the rules you set. And if you ever need to tweak a threshold or add a new rule, you can do it dynamically in your code or through our dashboard – no redeployment needed.
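Acting on a JSON response might look like the following sketch. The response shape (a `categories` object of scores between 0 and 1) is an assumption for illustration, not the documented format, and `flagged_categories` is a hypothetical helper. Keeping thresholds as plain data means they can be replaced at runtime without redeploying.

```python
import json

# Hypothetical response body; consult the API reference for the real field names.
SAMPLE_RESPONSE = '{"categories": {"adult": 0.91, "violence": 0.12, "hate": 0.40}}'

# Per-category thresholds held as plain data so they can be reloaded at runtime
# (for example from your own config store) without a redeploy.
thresholds = {"adult": 0.75, "violence": 0.80, "hate": 0.60}


def flagged_categories(response_body: str, thresholds: dict[str, float]) -> list[str]:
    """Return the categories whose score meets or exceeds the configured threshold."""
    scores = json.loads(response_body)["categories"]
    return sorted(c for c, s in scores.items() if c in thresholds and s >= thresholds[c])
```

With the thresholds above, only `adult` is flagged; lowering the `hate` threshold to 0.35 would flag `hate` as well for the same response.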

High Accuracy, Real-Time Results

Your users expect their content to be moderated correctly and immediately – not eventually. Detector24 delivers on both fronts with best-in-class accuracy and lightning-fast performance. We have trained specialized AI models specifically for image moderation, using vast amounts of real-world data. This focus shows in our results:

Industry-Leading Accuracy: In evaluations, Detector24's classifiers consistently achieve over 99% accuracy in detecting explicit adult content, with similarly high performance on other categories. Our advanced models minimize false negatives and false positives, so your users are protected from truly harmful imagery while safe content isn't wrongly taken down. We continuously monitor and improve our algorithms to maintain this standard of excellence.

Real-Time Processing: Speed is critical for automated moderation. Detector24's infrastructure processes images in a matter of milliseconds (often ~200ms per image for common checks). Incoming images are evaluated as they are uploaded, ensuring immediate moderation and instant yes/no decisions on content. The performance of image moderation APIs can vary depending on the specificity of the data used to train the models, and Detector24 leverages highly specific, real-world datasets for optimal results. Automated content assessment uses machine learning to compare images against labeled data, enabling accurate predictions and reliable moderation outcomes. There are no sluggish moderation queues – as soon as an image is uploaded, it's analyzed on the fly. Our API is synchronous and optimized for low-latency, so you don't have to build complex asynchronous pipelines or wait on callback notifications. Your app or website can confidently display user content knowing Detector24 has already vetted it in the background by the time it appears.

Our commitment to both accuracy and speed ensures a smooth user experience on your platform – inappropriate images are caught with pinpoint precision before they do harm, and legitimate content flows without unnecessary delay.

Best Practices for Image Moderation

To achieve the best results in image moderation, it's important to follow proven best practices. Start by leveraging Detector24's image moderation APIs to increase coverage and accuracy, and customize moderation rules and thresholds to align with your platform's specific content policies and user expectations. Regularly monitor and evaluate the performance of your moderation models, making adjustments as needed to address new types of inappropriate content or emerging threats. Adopting a hybrid approach, combining automated moderation with human review for edge cases, can further enhance accuracy and reduce the likelihood of false positives. Finally, Detector24 keeps its moderation models and algorithms up to date to ensure ongoing protection for your users and brand. By implementing these strategies, online communities and content-sharing services can effectively protect their users, maintain compliance, and build a trustworthy, positive brand reputation.

Start with Conservative Thresholds

Begin with lower confidence thresholds and adjust based on your platform's tolerance for false positives vs. false negatives.

Combine Multiple Models

Use multiple detection models together for comprehensive coverage. Layered detection reduces the risk of missed violations.

Monitor and Iterate

Regularly review flagged content and adjust your rules. Continuous optimization improves accuracy over time.

Tailor to Your Audience

Customize moderation rules based on your platform's specific audience, content types, and community guidelines.

Enable Real-Time Filtering

Integrate moderation at upload time to prevent inappropriate content from ever reaching your platform.

Balance Automation with Human Review

Use AI for initial filtering and flag edge cases for human moderators to maintain quality and fairness.
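Real-time filtering and human review can be combined in a single upload handler. In this minimal sketch, `moderate` is a hypothetical function that returns one confidence score for an image (for example, by calling the API), and the review queue is a plain list standing in for your moderation tool. The thresholds reuse the illustrative 75%/50% split. The handler fails closed: if the moderation call raises, the image is held for a human rather than published unchecked.

```python
from typing import Callable


def handle_upload(image_bytes: bytes,
                  moderate: Callable[[bytes], float],
                  review_queue: list[bytes],
                  block_above: float = 0.75,
                  allow_below: float = 0.50) -> str:
    """Moderate an upload before publishing; route uncertain cases to human review."""
    try:
        score = moderate(image_bytes)
    except Exception:
        # Fail closed: any error or timeout from the hypothetical `moderate` call
        # holds the image for a human instead of publishing it unchecked.
        review_queue.append(image_bytes)
        return "held"
    if score > block_above:
        return "rejected"
    if score < allow_below:
        return "published"
    review_queue.append(image_bytes)
    return "held"
```

Failing closed trades some extra review work during outages for the guarantee that nothing reaches the platform without a decision.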

Global Infrastructure and Compliance

Detector24 is built to serve a global user base with minimal delay and maximum data security. We offer worldwide coverage through a distributed cloud infrastructure, meaning wherever your users are, our moderation API responds quickly. You can choose the region where your images get processed, helping you optimize latency and comply with data localization laws. For example, if your business operates in Europe, you can select a European data center to process images in-region and meet GDPR requirements. Operating in North America or Asia-Pacific? We have servers there too. This flexible deployment ensures that using Detector24 won't conflict with your compliance needs or slow down your app – it's fast and lawful everywhere you operate.

Multi-Region Deployment

API endpoints deployed across North America, Europe, and Asia-Pacific for minimal latency worldwide.

GDPR Compliant

Full compliance with GDPR, CCPA, and other data protection regulations. Data residency options available.

Data Localization

Choose where your data is processed and stored. Meet regional compliance requirements effortlessly.

Quick and Developer-Friendly Integration

Getting started with Detector24 is a breeze. Our API was designed by developers, for developers, and we've made it as simple and intuitive as possible. Detector24's image moderation API lets developers integrate moderation features directly into their applications without building in-house solutions. Unlike some providers, Detector24 requires no expensive licenses and doesn't charge inflated prices, making it a cost-effective and flexible choice for businesses of any size. Whether you're building a mobile app, a web platform, or an enterprise system, you can integrate Detector24 in minutes. We provide clear documentation, quick-start guides, and SDKs for popular languages so you don't have to reinvent the wheel. If you ever hit a snag, our responsive support team is ready to help.

Simple API Call: It takes only one API call to analyze an image. For instance, you can check an image with curl, as in the request below. The model field tells Detector24 which detection model to run (nudity-detection in this example); other models can be specified to scan for whichever categories you need. The API returns a JSON response with detailed results for each requested model. You can just as easily use our client libraries in Python, JavaScript, PHP, and other languages to add this functionality to your codebase with a few lines of code.

From generating an API key to seeing your first moderated image, the process is quick and straightforward. We also offer a web console and demo sandbox if you want to experiment with image moderation before writing any code. Leading services like Amazon Rekognition automate image moderation workflows using machine learning, even for those without ML experience.

```bash
curl -X POST https://api.bynn.com/v1/moderation/image \
  -H "Authorization: Bearer mod_sk_live_xxxxxxxxxxxxx" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "nudity-detection",
    "image_url": "https://example.com/image.jpg"
  }'
```

RESTful API

Simple HTTP endpoints with JSON responses

SDKs Available

Official libraries for Python, Node.js, PHP, Ruby

Quick Start

Get up and running in under 5 minutes
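For comparison, here is the curl request rebuilt with Python's standard library (`urllib.request`) rather than an official SDK, whose interface isn't shown on this page. The endpoint, headers, and body fields are copied from the curl example; `build_moderation_request` and `moderate_image` are hypothetical helper names, and because the response structure isn't documented here, `moderate_image` simply returns the decoded JSON as a dictionary.

```python
import json
import urllib.request

API_URL = "https://api.bynn.com/v1/moderation/image"  # endpoint from the curl example


def build_moderation_request(api_key: str, image_url: str,
                             model: str = "nudity-detection") -> urllib.request.Request:
    """Build the same POST request as the curl example, without sending it."""
    body = json.dumps({"model": model, "image_url": image_url}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def moderate_image(api_key: str, image_url: str) -> dict:
    """Send the request and decode the JSON response (performs a network call)."""
    request = build_moderation_request(api_key, image_url)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))
```

Separating request construction from sending keeps the request easy to inspect and test without network access.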

Scales with You as Your Business Grows

Whether you're moderating dozens of images a day or billions of images a month, Detector24 can handle it. Our cloud-native platform scales automatically to meet your volume, so you'll never outgrow our solution. As your user base and content volume increase, Detector24 ramps up seamlessly – you won't experience slow-downs or backlogs in moderation. This means you can focus on growing your community or app features, knowing that content safety will keep pace. From a small startup to a global enterprise, we've got the capacity to moderate your images in real time. Scale is practically unlimited, and our pricing plans accommodate everything from low volumes to massive workloads cost-effectively.

Auto-Scaling

Infrastructure automatically scales to match demand

Unlimited Requests

No hard limits on API calls or throughput

Pay As You Grow

Pricing that scales with usage, no surprises

Privacy and Security First

We understand that user content can be sensitive. Detector24 is designed with privacy in mind – there are no human reviewers in the loop. Unlike some moderation approaches that rely on outsourcing to human moderators (which can risk exposing private user photos or data), Detector24's AI handles everything automatically. Your users' photos remain confidential: images are processed by our algorithms and not stored or seen by any human. We transmit and store data securely, using encryption and following industry best practices for data protection. After analysis, we only keep the minimal data needed for reporting and model improvement, so you maintain control over your content. In short, your photos stay private – just the way your users expect it to be on a trustworthy platform.

End-to-End Encryption

All data transmitted over TLS 1.3. Images are encrypted at rest and in transit.

No Human Reviewers

Fully automated AI moderation. Your images are never seen by human moderators.

Automatic Data Deletion

Images are automatically deleted after analysis. No long-term storage of your content.

SOC 2 Compliant

Independently audited security controls and compliance certifications.

With Detector24, image moderation truly becomes easy, accurate, and instant. You get peace of mind that your platform is free from harmful visuals, and your users get a safe, positive experience. All of this is achieved with the power of advanced AI – working behind the scenes 24/7 to protect your community.

Ready to experience effortless image moderation? Reach out to our team or sign up for a free trial of Detector24's image moderation API, and see how we can help you build a safer platform today.

Ready to start moderating?

Get started with our free tier. No credit card required.